The dynamic, pay-as-you-go nature of the AWS Cloud gives you the opportunity to build systems that respond gracefully to changes in load while paying only for the compute, storage, network, database, and other resources that you actually consume.

Over the last couple of years, as our customer base has become increasingly sophisticated and cloud-aware, we have been working to provide equally sophisticated tools for viewing and managing costs. Many enterprises use AWS for multiple projects, often spread across multiple departments and billed directly or through linked accounts.

In the usual budget-centric environment found in an enterprise, no one likes a surprise (except if it is an AWS price reduction). Our goal is to give you a broad array of cost management tools that tell you what you are currently spending and how much you can expect to spend in the future. We also want to make sure that you get an early warning if costs exceed your expectations for some reason.

We launched the Cost Explorer last year. This tool integrates with the AWS Billing Console and gives you reporting, analytics, and visualization tools to help you to track and manage your AWS costs.

New Budgets and Forecasts

Today we are adding support for budgets and forecasts. You can now define and track budgets for your AWS costs, forecast your AWS costs for up to three months out, and choose to receive email notifications when actual costs exceed, or are forecast to exceed, budgeted costs.

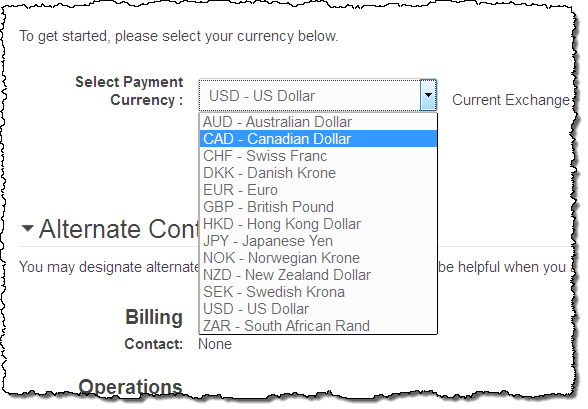

Budgeting and forecasting take place on a fine-grained basis, with filtering or customization based on Availability Zone, Linked Account, API operation, Purchase Option (e.g. Reserved), Service, and Tag.

The operations provided by these new tools replace the tedious and time-consuming manual calculations that many of our customers (both large and small) have been performing as part of their cost management and budgeting process. After running a private beta with over a dozen large-scale AWS customers, we are confident that these tools will help you to do an even better job of understanding and managing your costs.

Let’s take a closer look at these new features!

New Budgets

You can now set monthly budgets around AWS costs, customized by multiple dimensions including tags. For example, you could create budgets to track EC2, RDS, and S3 costs separately for each active development effort.

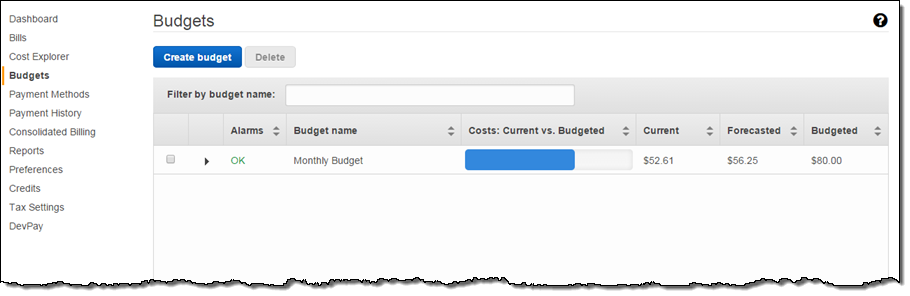
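To make the per-project idea concrete, here is a minimal Python sketch of the kind of grouping that such budgets imply: costs are summed for each combination of project tag and service. The `LineItem` record, the `project` tag name, and the sample figures are all hypothetical and chosen for illustration; they are not an AWS billing format or API.

```python
from collections import defaultdict
from typing import NamedTuple


class LineItem(NamedTuple):
    """Hypothetical cost record (an assumption, not an AWS billing format)."""
    project: str   # value of an illustrative "project" tag
    service: str   # for example "EC2", "RDS", or "S3"
    cost: float    # cost in USD


def costs_by_project_and_service(items):
    """Sum the cost of all line items for each (project, service) pair."""
    totals = defaultdict(float)
    for item in items:
        totals[(item.project, item.service)] += item.cost
    return dict(totals)


# Made-up sample data for two development efforts.
items = [
    LineItem("checkout", "EC2", 120.0),
    LineItem("checkout", "S3", 15.5),
    LineItem("search", "EC2", 80.0),
    LineItem("checkout", "EC2", 30.0),
]
totals = costs_by_project_and_service(items)
```

Each key in `totals` corresponds to one budget you might define, such as EC2 costs for the `checkout` effort.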

The AWS Management Console will list each of your budgets (you can also filter by name):

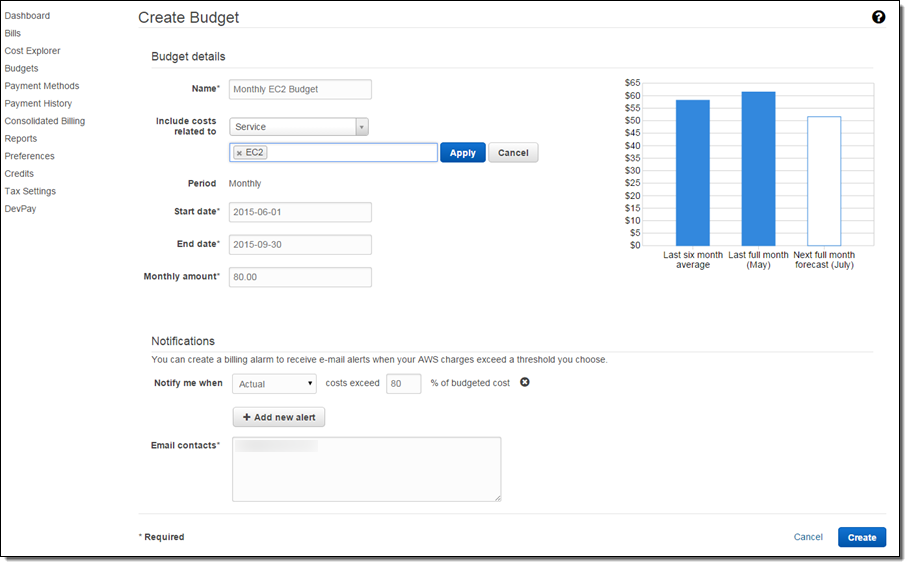

Here’s how you create a new budget. As you can see, you can choose to include costs related to any desired list of AWS services:

You can set alarms that will trigger based on actual or forecast costs, with email notification to a designated individual or group. These alarms make use of Amazon CloudWatch but are somewhat more abstract in order to better meet the needs of your business and accounting folks. You can create multiple alarms for each budget. Perhaps you want one alarm to trigger when actual costs exceed 80% of budget costs and another when forecast costs exceed budgeted costs.

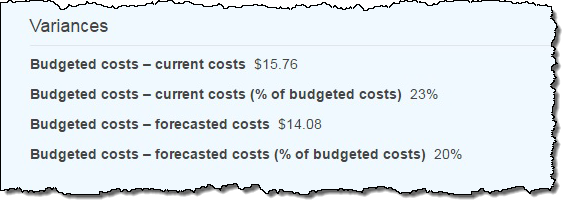
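The two-alarm setup described above can be sketched in a few lines of Python. This only illustrates the threshold arithmetic; the `Alarm` class, the `triggered_alarms` function, and the dollar amounts are assumptions made for the example and do not reflect how the service or CloudWatch implements alarms.

```python
from dataclasses import dataclass


@dataclass
class Alarm:
    """Hypothetical alarm definition (illustrative only)."""
    basis: str            # "actual" or "forecast"
    threshold_pct: float  # percentage of the budgeted amount


def triggered_alarms(budget, actual, forecast, alarms):
    """Return the alarms whose cost basis exceeds its share of the budget."""
    values = {"actual": actual, "forecast": forecast}
    return [
        alarm for alarm in alarms
        if values[alarm.basis] > budget * alarm.threshold_pct / 100.0
    ]


alarms = [
    Alarm(basis="actual", threshold_pct=80.0),     # actual costs above 80% of budget
    Alarm(basis="forecast", threshold_pct=100.0),  # forecast costs above the full budget
]

# Made-up figures: a 1,000 USD budget, 850 USD spent, 980 USD forecast.
fired = triggered_alarms(budget=1000.0, actual=850.0, forecast=980.0, alarms=alarms)
```

With these figures only the first alarm fires: actual spend of 850 USD is above the 800 USD threshold, while the 980 USD forecast is still under the 1,000 USD budget.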

You can also view variances (budgeted vs. actual) in the console. Here’s an example:

New Forecasts

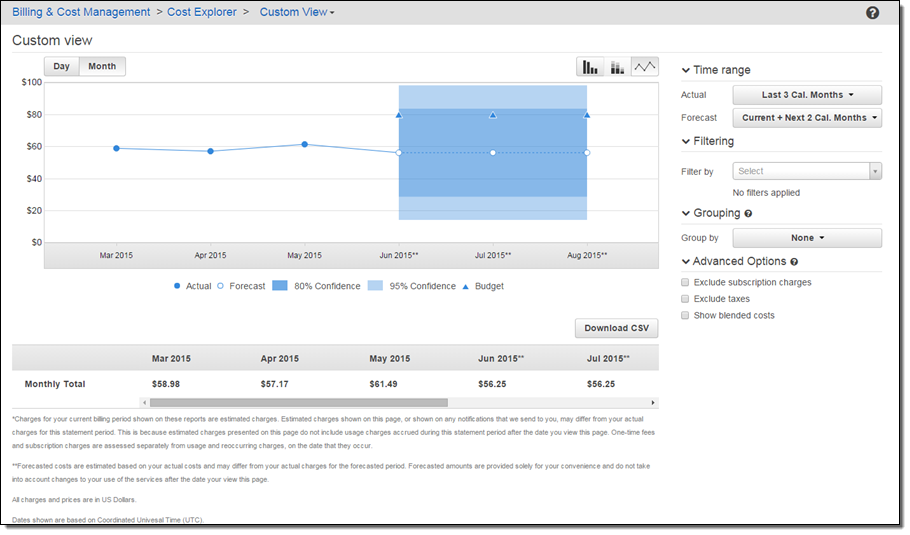

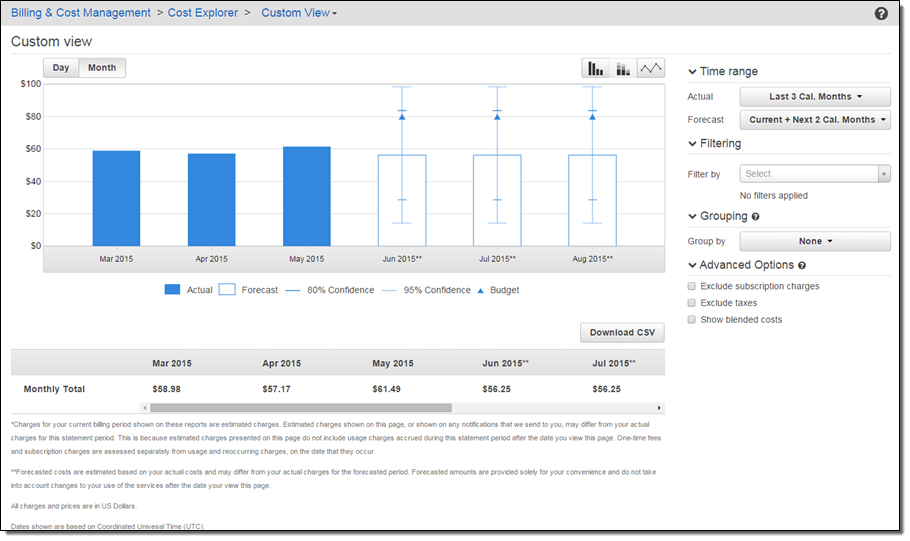

Many AWS teams use an internal algorithm to predict demand for their offerings. They use the results to help them to allocate development and operational resources, plan and execute marketing campaigns, and more. Our new budget forecasting tool makes use of the same algorithm to present you with cost estimates that include both 80% and 95% confidence interval ranges.

As is the case with budgets, you can filter forecasts on a wide variety of dimensions. You can create multiple forecasts and you can view them in the context of historical costs.

After you create a forecast, you can view it as a line chart or as a bar chart:

As you can see from the screenshots, the forecast, budget, and confidence intervals are all clearly visible:

These new features are available now and you can start using them today!

— Jeff;
