Sunday, July 31, 2016

Amazon Launches a Page Exclusively for Kickstarter Products

Thursday, July 28, 2016

Classic, Hand Crafted Toys for your Kids

Amazon Cognito Your User Pools – Now Generally Available

A few months ago I wrote about the new Your User Pools feature for Amazon Cognito. As I wrote at the time, you can use this feature to easily add user sign-up and sign-in to your mobile and web apps. The fully managed user directories can scale to hundreds of millions of users and you can have multiple directories per AWS account. Creating a user pool takes just a few minutes and you can decide exactly which attributes (address, email, gender, phone number, and so forth, plus custom attributes) must be entered when a new user signs up for your app or service. On the security side, you can specify the desired password strength, require the use of Multi-Factor Authentication (MFA), and verify new users via phone number or email address.

Now Generally Available

We launched Your User Pools as a public beta and received lots of great feedback. Today we are making Your User Pools generally available and we are also adding a large collection of new features:

- Device Remembering – Cognito can remember the devices that each user signs in from.

- User Search – Search for users in a user pool based on an attribute.

- Customizable Email Addresses – Control the email addresses for emails to users in your user pool.

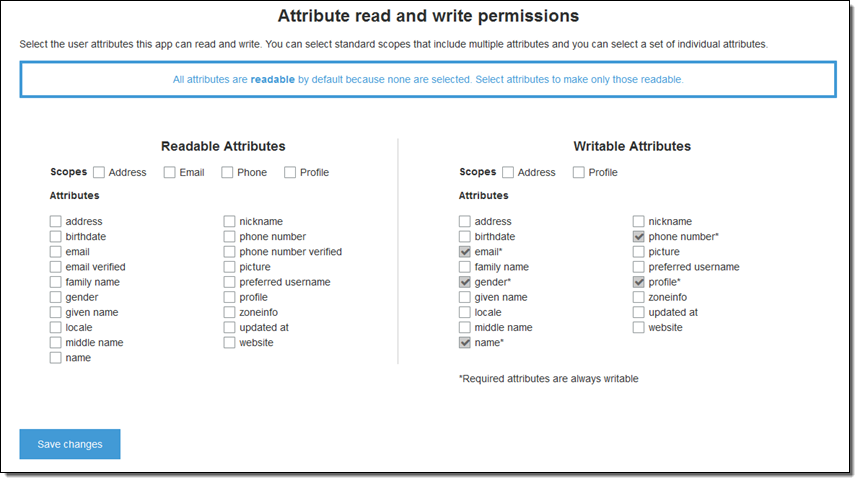

- Attribute Permissions – Set fine-grained permissions for each user attribute.

- Custom Authentication Flow – Use new APIs and Lambda triggers to customize the sign-in flow.

- Admin Sign-in – Your app can now sign in users from backend servers or Lambda functions.

- Global Sign-out – Allow a user to sign out from all signed-in devices or browsers.

- Custom Expiration Period – Set an expiration period for refresh tokens.

- API Gateway Integration – Use user pool to authorize Amazon API Gateway requests.

- New Regions – Cognito Your User Pools are now available in additional AWS Regions.

Let's take a closer look at each of these new features!

Device Remembering

Cognito can now remember the set of devices used by (signed in from) each user. You, as the creator of the user pool, have the option to allow your users to request this behavior. If you have enabled MFA for a user pool, you can also choose to eliminate the need for entry of an MFA code on a device that has been remembered. This simplifies and streamlines the login process on a remembered device, while still requiring entry of an MFA code for unrecognized devices. You can also list a user's devices and allow them to sign out from a device remotely.

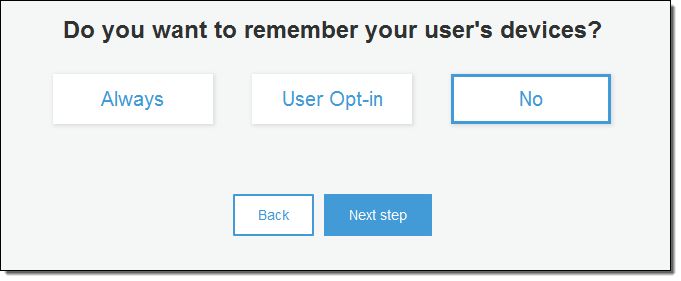

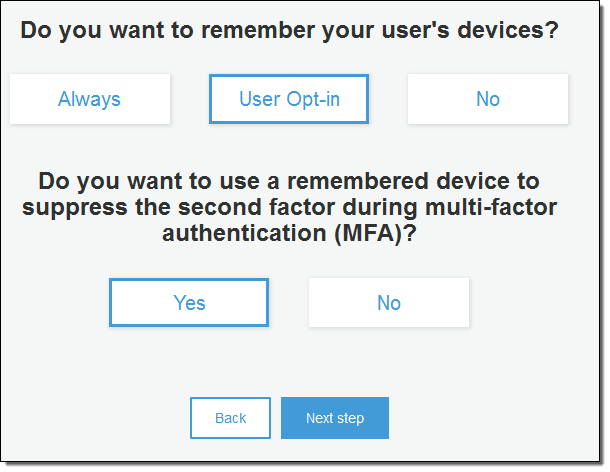

You can enable and customize this feature when you create a new user pool; you can also set it up for an existing pool. Here's how you enable and customize it when you create a new user pool. First you enable the feature by clicking on Always or User Opt-in:

Then you indicate whether you would like to suppress MFA on remembered devices:

The AWS Mobile SDKs for iOS, Android, and JavaScript contain new methods that you can call from your app to remember devices.
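If you create the pool through the API rather than the Console, the two choices above appear to correspond to the DeviceConfiguration parameter of CreateUserPool. Here's a minimal Python sketch, assuming boto3 is installed and credentials are configured. The helper names and the "always" / "opt-in" labels are made up for illustration, and the mapping of the Console options to the two flags is an assumption based on the API reference, not something this post states:

```python
def device_configuration(remember: str, suppress_mfa: bool) -> dict:
    """Translate the two Console choices into a DeviceConfiguration dict.

    remember     -- "always" or "opt-in" (the Always / User Opt-in buttons)
    suppress_mfa -- True to skip the MFA code on a remembered device
    """
    if remember not in ("always", "opt-in"):
        raise ValueError("remember must be 'always' or 'opt-in'")
    return {
        # True: remember a device only when the user asks for it.
        "DeviceOnlyRememberedOnUserPrompt": remember == "opt-in",
        # True: a remembered device can stand in for the MFA code.
        "ChallengeRequiredOnNewDevice": suppress_mfa,
    }


def create_pool(pool_name: str, remember: str, suppress_mfa: bool) -> dict:
    """Create a user pool with device remembering configured (calls AWS)."""
    import boto3  # imported here so the helper above works without boto3

    client = boto3.client("cognito-idp")
    return client.create_user_pool(
        PoolName=pool_name,
        DeviceConfiguration=device_configuration(remember, suppress_mfa),
    )
```

Keeping the dictionary builder separate from the AWS call makes it easy to check the settings before anything is created.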

User Search

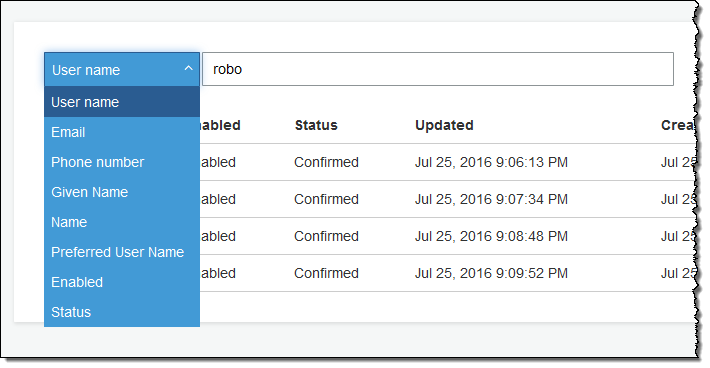

As the creator of a user pool, you can now search for users based on a user attribute such as username, given_name, family_name, name, preferred_username, email, phone_number, status, or user_status.

You can do a full match or a prefix match using the AWS Management Console, the ListUsers API function, or the list-users command line tool. Here's a Console-powered search:
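The same search can be run from code. Here's a rough Python sketch, assuming boto3 and configured credentials. The function names are hypothetical; the filter syntax (attribute name, then = for a full match or ^= for a prefix match, then a quoted value) follows the ListUsers API reference:

```python
def build_user_filter(attribute: str, value: str, prefix: bool = False) -> str:
    """Return a ListUsers filter string such as: email ^= "jeff"."""
    operator = "^=" if prefix else "="
    # Quotation marks inside the value must be escaped with a backslash.
    escaped = value.replace('"', '\\"')
    return f'{attribute} {operator} "{escaped}"'


def search_users(user_pool_id: str, attribute: str, value: str,
                 prefix: bool = False) -> list:
    """Run the search against a user pool (calls AWS)."""
    import boto3  # imported here so build_user_filter works without boto3

    client = boto3.client("cognito-idp")
    response = client.list_users(
        UserPoolId=user_pool_id,
        Filter=build_user_filter(attribute, value, prefix),
    )
    return response["Users"]
```

For example, `search_users(pool_id, "email", "jeff", prefix=True)` would look for users whose email address starts with "jeff" (only the first page of results is returned in this sketch).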

Customizable Email Addresses

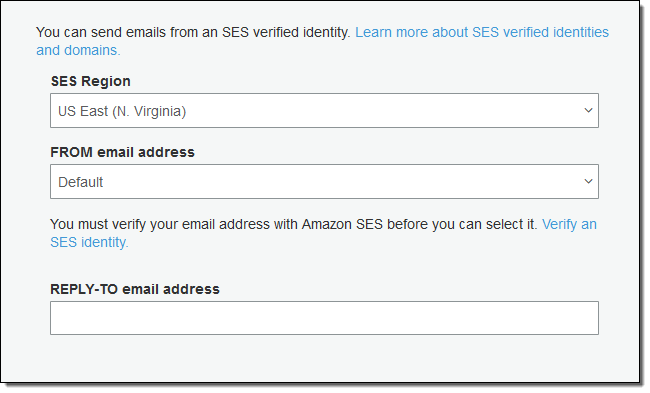

You can now specify the From and the Reply-To email addresses that are used to communicate with your users. Here's how you specify the addresses when you create a new pool:

You will need to verify the From address with Amazon Simple Email Service (SES) before you can use it (read Verifying Email Addresses in Amazon SES to learn more).

Attribute Permissions

You can now set per-app read and write permissions for each user attribute. This gives you the ability to control which applications can see and/or modify each of the attributes that are stored for your users. For example, you could have a custom attribute that indicates whether a user is a paying customer or not. Your apps could see this attribute but could not modify it directly. Instead, you would update this attribute using an administrative tool or a background process. Permissions for user attributes can be set from the Console, the API, or the CLI.
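To make the paying-customer example concrete, here's a small Python sketch of how the permissions might be expressed through the API, using the ReadAttributes and WriteAttributes parameters of CreateUserPoolClient. The attribute name custom:paying_customer and the helper names are hypothetical, and boto3 with configured credentials is assumed:

```python
def attribute_permissions(readable, writable) -> dict:
    """Build the read/write attribute lists for one app client."""
    return {
        "ReadAttributes": sorted(readable),
        "WriteAttributes": sorted(writable),
    }


# Hypothetical custom attribute (custom attributes carry a "custom:" prefix).
PAYING = "custom:paying_customer"

# The mobile app may read the flag but can only write ordinary profile fields.
mobile_app = attribute_permissions(
    readable={"email", "name", PAYING},
    writable={"email", "name"},
)


def create_app_client(user_pool_id: str, client_name: str, permissions: dict) -> dict:
    """Create an app client with the given permissions (calls AWS)."""
    import boto3  # imported here so the code above works without boto3

    client = boto3.client("cognito-idp")
    return client.create_user_pool_client(
        UserPoolId=user_pool_id, ClientName=client_name, **permissions
    )
```

An administrative tool would use a separate app client (or the admin APIs) that is allowed to write the flag.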

Custom Authentication Flow

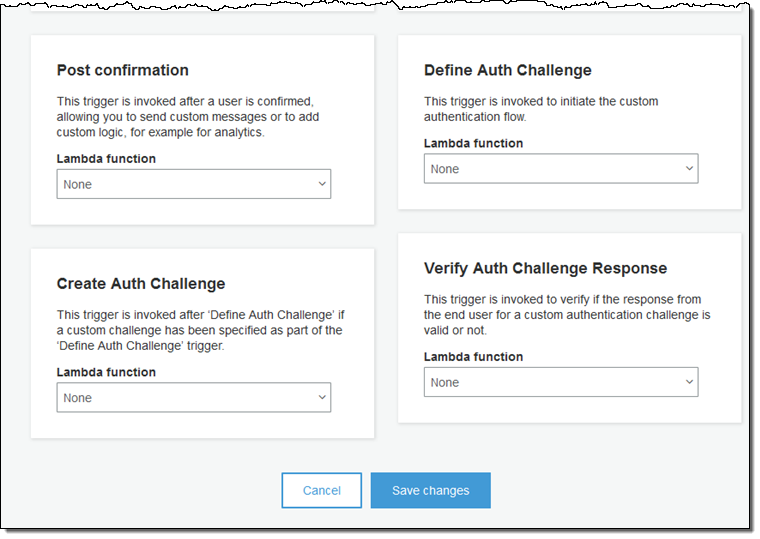

You can now use a pair of new API functions (InitiateAuth and RespondToAuthChallenge) and three new Lambda triggers to create your own sign-in flow or to customize the existing one. You can, for example, customize the user flows for users with different levels of experience, different locations, or different security requirements. You could require the use of a CAPTCHA for some users or for all users, as your needs dictate.

The new Lambda triggers are:

- Define Auth Challenge – Invoked to initiate the custom authentication flow.

- Create Auth Challenge – Invoked if a custom authentication challenge has been defined.

- Verify Auth Challenge Response – Invoked to check the validity of a custom authentication challenge.
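Here's one way the three triggers could fit together, sketched as Python Lambda handlers for a simple one-time-code challenge. This is an illustration under assumptions, not the flow from the post: the six-digit code, the three-attempt limit, and the handler names are invented, and the event fields (session, challengeName, issueTokens, failAuthentication, publicChallengeParameters, privateChallengeParameters, challengeAnswer, answerCorrect) follow the Cognito trigger documentation:

```python
import secrets

MAX_ATTEMPTS = 3  # hypothetical limit on wrong answers


def define_auth_challenge(event, context=None):
    """Decide the next step from the challenges answered so far."""
    session = event["request"]["session"]
    response = event["response"]
    if session and session[-1]["challengeResult"]:
        # The last challenge was answered correctly: issue tokens.
        response["issueTokens"] = True
        response["failAuthentication"] = False
    elif len(session) >= MAX_ATTEMPTS:
        # Too many wrong answers: end the sign-in attempt.
        response["issueTokens"] = False
        response["failAuthentication"] = True
    else:
        # Present (or re-present) the custom challenge.
        response["issueTokens"] = False
        response["failAuthentication"] = False
        response["challengeName"] = "CUSTOM_CHALLENGE"
    return event


def create_auth_challenge(event, context=None):
    """Generate the challenge; only the public part reaches the app."""
    if event["request"]["challengeName"] == "CUSTOM_CHALLENGE":
        code = f"{secrets.randbelow(10**6):06d}"
        # A real flow would deliver the code (or a CAPTCHA) to the user here.
        event["response"]["publicChallengeParameters"] = {"hint": "Enter the 6-digit code"}
        event["response"]["privateChallengeParameters"] = {"answer": code}
        event["response"]["challengeMetadata"] = "CODE_CHALLENGE"
    return event


def verify_auth_challenge_response(event, context=None):
    """Compare the user's answer with the private expected answer."""
    expected = event["request"]["privateChallengeParameters"]["answer"]
    given = event["request"]["challengeAnswer"]
    event["response"]["answerCorrect"] = str(given) == expected
    return event
```

The app drives this flow with InitiateAuth and then answers each challenge with RespondToAuthChallenge until tokens are issued or authentication fails.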

You can set up the triggers from the Console like this:

Global Sign-out

You can now give your users the option to sign out (by invalidating tokens) of all of the devices where they had been signed in. Apps can call the GlobalSignOut function using a valid, non-expired, non-revoked access token. Developers can remotely sign out any user by calling the AdminUserGlobalSignOut function using a Pool ID and a username.
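As a quick illustration of the two entry points, here's a Python sketch with thin wrappers around the corresponding boto3 calls. The wrapper names are hypothetical, and the client argument is assumed to be the object returned by `boto3.client("cognito-idp")`:

```python
def sign_out_everywhere(client, access_token: str) -> None:
    """User-initiated: invalidate the tokens issued to the token's owner."""
    client.global_sign_out(AccessToken=access_token)


def admin_sign_out_everywhere(client, user_pool_id: str, username: str) -> None:
    """Developer-initiated: sign a user out without needing their token."""
    client.admin_user_global_sign_out(UserPoolId=user_pool_id, Username=username)
```

The first call needs the user's own access token, so it belongs in the app; the second needs developer credentials and fits a backend server or an administrative tool.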

Custom Expiration Period

Cognito sign-in makes use of “refresh” tokens to eliminate the need to sign in every time an application is opened. By default, the token expires after 30 days. In order to give you more control over the balance between security and convenience, you can now set a custom expiration period for the refresh tokens generated by each of your user pools.

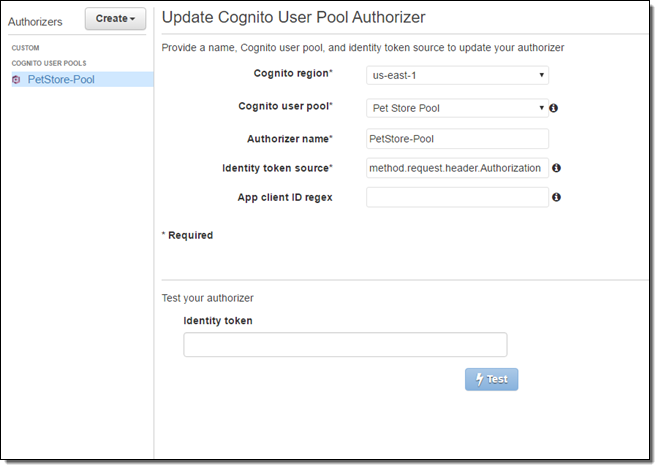

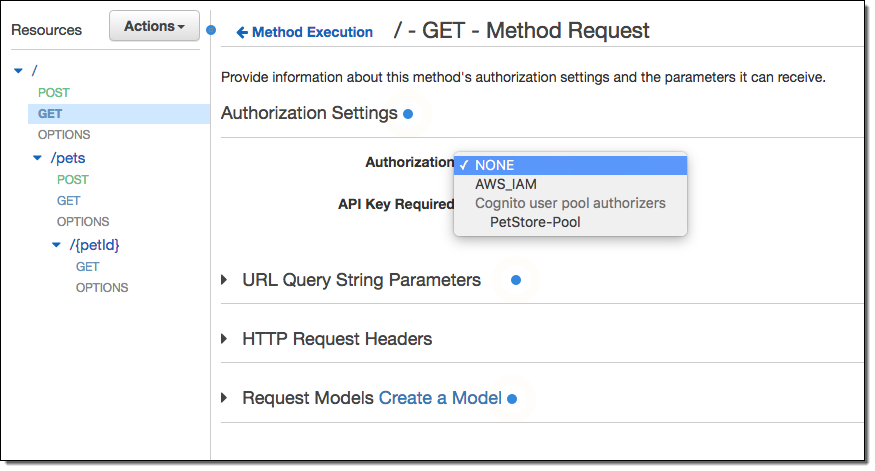

API Gateway Integration

Cognito user pools can now work hand-in-hand with Amazon API Gateway to authorize API requests. You can configure API Gateway to accept ID tokens to authorize users based on their presence in a user pool.

To do this, you first create a Cognito User Pool Authorizer using the API Gateway Console, referencing the user pool and choosing the request header that will contain the identity token:

Navigate to the desired method and select the new Authorizer:
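On the client side, a call to the protected method then carries the identity token in the header chosen for the authorizer. Here's a minimal Python sketch using only the standard library. The endpoint URL and token are placeholders, and the header name Authorization is an assumption (use whichever header you selected):

```python
import urllib.request


def build_authorized_request(url: str, id_token: str,
                             header: str = "Authorization") -> urllib.request.Request:
    """Attach the Cognito identity token to a GET request for API Gateway."""
    return urllib.request.Request(url, headers={header: id_token}, method="GET")


# Hypothetical endpoint; the token comes from a successful user pool sign-in.
request = build_authorized_request(
    "https://example.execute-api.us-east-1.amazonaws.com/prod/items",
    "ID_TOKEN_FROM_SIGN_IN",
)

# Sending the request requires a real endpoint and a real token:
# with urllib.request.urlopen(request) as response:
#     print(response.status, response.read())
```

If the token is missing, expired, or issued by a different user pool, API Gateway should reject the call before it reaches your backend.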

New Regions

As part of today's launch we are making Cognito available in the US West (Oregon) Region.

In addition to the existing availability in the US East (Northern Virginia) Region, we are making Your User Pools available in the Europe (Ireland), US West (Oregon), and Asia Pacific (Tokyo) Regions.

Available Now

These new features are available now and you can start using them today!

Jeff;

AWS Application Discovery Service Update – Agentless Discovery for VMware

As I wrote earlier this year, AWS Application Discovery Service is designed to help you to dig in to your existing environment, identify what's going on, and provide you with the information and visibility that you need to have in order to successfully migrate your systems and applications to the cloud (see my post, New – AWS Application Discovery Service – Plan Your Cloud Migration, for more information).

The discovery process described in my blog post makes use of a small, lightweight agent that runs on each existing host. The agent quietly and unobtrusively collects relevant system information, stores it locally for review, and then uploads it to Application Discovery Service across a secure connection on port 443. The information is processed, correlated, and stored in an encrypted repository that is protected by AWS Key Management Service (KMS).

In virtualized environments, installing the agent on each guest operating system may be impractical for logistical or other reasons. Although the agent runs on a fairly broad spectrum of Windows releases and Linux distributions, there's always a chance that you still have older releases of Windows or exotic distributions of Linux in the mix.

New Agentless Discovery

In order to bring the benefits of AWS Application Discovery Service to even more AWS customers, we are introducing a new, agentless discovery option today.

If you have virtual machines (VMs) that are running in the VMware vCenter environment, you can use this new option to collect relevant system information without installing an agent on each guest. Instead, you load an on-premises appliance into vCenter and allow it to discover the guest VMs therein.

The vCenter appliance captures system performance information and resource utilization for each VM, regardless of what operating system is in use. However, it cannot “look inside” of the VM and as such cannot figure out what software is installed or what network dependencies exist. If you need to take a closer look at some of your existing VMs in order to plan your migration, you can install the Application Discovery agent on an as-needed basis.

Like the agent-based model, agentless discovery gathers information and stores it locally so that you can review it before it is sent to Application Discovery Service.

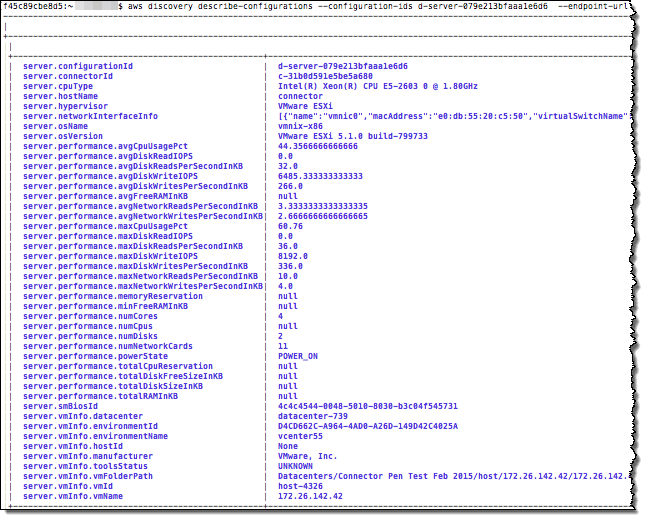

After the information has been uploaded, you can explore it using the AWS Command Line Interface (CLI). For example, you can use the describe-configurations command to learn more about the configuration of a particular guest:

You can also export the discovered data in CSV form and then use it to plan your migration. To learn more about this feature, read about the export-configurations command.
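If you would rather work from code than from the CLI, the same lookup is available through the SDK. Here's a hedged Python sketch built on the boto3 describe_configurations call. The function names are hypothetical, the client argument is assumed to come from `boto3.client("discovery")`, and the configuration keys you ask for depend on what was actually discovered:

```python
def describe_guest(client, configuration_id: str) -> dict:
    """Fetch the discovered attributes for one configuration item."""
    response = client.describe_configurations(configurationIds=[configuration_id])
    configurations = response["configurations"]
    if not configurations:
        raise LookupError(f"no configuration found for {configuration_id}")
    return configurations[0]


def pick(configuration: dict, keys) -> dict:
    """Keep only the attributes of interest, skipping any that are absent."""
    return {key: configuration[key] for key in keys if key in configuration}
```

A migration planning script could loop over the discovered guests, call `describe_guest` for each one, and use `pick` to build a compact summary.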

Getting Started with Agentless Discovery

To get started, sign up here and we'll provide you with a link to an installer for the vCenter appliance.

Jeff;

Wednesday, July 27, 2016

No Selfie Stick, No problem! Meet Podo, the Stick-and-Shoot Camera

Tuesday, July 26, 2016

Apple Rumors: The Latest on iPhone 7 and 2nd Gen Apple Watch

Unique School Supplies at Amazon Handmade

Monday, July 25, 2016

Protect your Echo and Kindle with SquareTrade Protection Plan

How AWS Powered Amazon's Biggest Day Ever

The second annual Prime Day was another record-breaking success for Amazon, with global orders surpassing those of Black Friday, Cyber Monday, and Prime Day 2015.

According to a report published by Slice Intelligence, Amazon accounted for 74% of all US consumer e-commerce on Prime Day 2016. This one-day-only global shopping event, exclusively for Amazon Prime members, saw record-high levels of traffic, including double the number of orders on the Amazon Mobile App compared to Prime Day 2015. Members around the world purchased more than 2 million toys, more than 1 million pairs of shoes and more than 90,000 TVs in one day (see Amazon's Prime Day is the Biggest Day Ever for more stats). An event of this scale requires infrastructure that can easily scale up to match the surge in traffic.

Scaling AWS-Style

The Amazon retail site uses a fleet of EC2 instances to handle web traffic. To serve the massive increase in customer traffic for Prime Day, the Amazon retail team increased the size of their EC2 fleet, adding capacity that was equal to all of AWS and Amazon.com back in 2009. Resources were drawn from multiple AWS regions around the world.

The morning of July 11th was cool and a few morning clouds blanketed Amazon's Seattle headquarters. As 8 AM approached, the Amazon retail team was ready for the first of 10 global Prime Day launches. Across the Pacific, it was almost midnight. In Japan, mobile phones, tablets, and laptops glowed in anticipation of Prime Day deals. As traffic began to surge in Japan, CloudWatch metrics reflected the rising fleet utilization as CloudFront endpoints and ElastiCache nodes lit up with high-velocity mobile and web requests. This wave of traffic then circled the globe, arriving in Europe and the US over the course of 40 hours and generating 85 billion clickstream log entries. Orders surpassed Prime Day 2015 by more than 60% worldwide and more than 50% in the US alone. On the mobile side, more than one million customers downloaded and used the Amazon Mobile App for the first time.

As part of Prime Day, Amazon.com saw a significant uptick in their use of 38 different AWS services including:

- Analytics – Amazon Redshift, Amazon Machine Learning.

- Application Services – Amazon API Gateway, CloudSearch, Data Pipeline, Elastic Transcoder, SES, SNS, SQS, SWF.

- Compute – EC2, Auto Scaling, EBS, EMR, Lambda.

- Database – DynamoDB, ElastiCache, Kinesis, Kinesis Firehose, RDS.

- Management Tools – CloudTrail, CloudWatch, Trusted Advisor.

- Mobile – Mobile Analytics.

- Networking – Direct Connect, Directory Service, Virtual Private Cloud, Route 53.

- Security & Identity – CloudHSM, IAM, KMS.

- Storage & Content Delivery – CloudFront, S3, Amazon Glacier.

To further illustrate the scale of Prime Day and the opportunity for other AWS customers to host similar large-scale, single-day events, let's look at Prime Day through the lens of several AWS services:

- Amazon Mobile Analytics events increased 1,661% compared to the same day the previous week.

- Amazon's use of CloudWatch metrics increased 400% worldwide on Prime Day, compared to the same day the previous week.

- DynamoDB served over 56 billion extra requests worldwide on Prime Day compared to the same day the previous week.
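The percentages above are increases over the baseline, so an increase of 400% means five times the earlier volume, not four. A tiny Python helper (the function name is just for illustration) makes the conversion explicit:

```python
def growth_multiplier(percent_increase: float) -> float:
    """Convert a percentage increase into a multiple of the baseline."""
    return 1 + percent_increase / 100


# CloudWatch metrics: a 400% increase is 5 times the baseline.
cloudwatch = growth_multiplier(400)

# Mobile Analytics events: a 1,661% increase is roughly 17.6 times the baseline.
mobile_analytics = growth_multiplier(1661)
```

This is the kind of multiple to plan for when sizing capacity for a single-day event.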

Running on AWS

The AWS team treats Amazon.com just like any of our other big customers. The two organizations are business partners and communicate through defined support plans and channels. Sticking to this somewhat formal discipline helps the AWS team to improve the support plans and the communication processes for all AWS customers.

Running the Amazon website and mobile app on AWS makes short-term, large scale global events like Prime Day technically feasible and economically viable. When I joined Amazon.com back in 2002 (before the site moved to AWS), preparation for the holiday shopping season involved a lot of planning, budgeting, and expensive hardware acquisition. This hardware helped to accommodate the increased traffic, but the acquisition process meant that Amazon.com sat on unused and lightly utilized hardware after the traffic subsided. AWS enables customers to add the capacity required to power big events like Prime Day, and enables this capacity to be acquired in a much more elastic, cost-effective manner. All of the undifferentiated heavy lifting required to create an online event at this scale is now handled by AWS so the Amazon retail team can focus on delivering the best possible experience for its customers.

Lessons Learned

The Amazon retail team was happy that Prime Day was over, and ready for some rest, but they shared some of what they learned with me:

- Prepare – Planning and testing are essential. Use historical metrics to help forecast and model future traffic, and to estimate your resource needs accordingly. Prepare for failures with GameDay exercises – intentionally breaking various parts of the infrastructure and the site in order to simulate several failure scenarios (read Resilience Engineering – Learning to Embrace Failure to learn more about GameDay exercises at Amazon).

- Automate – Reduce manual efforts and automate everything. Take advantage of services that can scale automatically in response to demand – Route 53 to automatically scale your DNS, Auto Scaling to scale your EC2 capacity according to demand, and Elastic Load Balancing for automatic failover and to balance traffic across multiple regions and availability zones (AZs).

- Monitor – Use Amazon CloudWatch metrics and alarms liberally. CloudWatch monitoring helps you stay on top of your usage to ensure the best experience for your customers.

- Think Big – Using AWS gave the team the resources to create another holiday season. Confidence in your infrastructure is what enables you to scale your big events.

As I mentioned before, nothing is stopping you from envisioning and implementing an event of this scale and scope!

I would encourage you to think big, and to make good use of our support plans and services. Our Solutions Architects and Technical Account Managers are ready to help, as are our APN Consulting Partners. If you are planning for a large-scale one-time event, give us a heads-up and we'll work with you before and during the event.

-Jeff;

PS – What did you buy on Prime Day?

Sunday, July 24, 2016

Must-Have Sun Care Products this Summer

Thursday, July 21, 2016

Discounted Student Loans for Prime Members?

AWS Webinars – July, 2016

We have some awesome webinars lined up for next week! As always, they are free but do often fill up, so go ahead and register. Here's the lineup (all times are PT and each webinar runs for one hour):

July 26

- 9:00 AM – Getting Started with AWS.

- Noon – SQL to NoSQL: Best Practices with Amazon DynamoDB.

July 27

- 9:00 AM – Mobile App Testing with AWS Device Farm.

- 10:30 AM – Amazon EC2 Masterclass.

- Noon – Getting Started with IoT.

July 28

- 9:00 AM – Intro to Elastic File System.

- 10:30 AM – Getting Started with Amazon Redshift.

- Noon – Running fast, interactive queries on petabyte datasets using Presto.

July 29

-

Jeff;

EC2 Run Command Update – Monitor Execution Using Notifications

We launched EC2 Run Command late last year and have enjoyed seeing our customers put it to use in their cloud and on-premises environments. After the launch, we quickly added Support for Linux Instances, the power to Manage & Share Commands, and the ability to do Hybrid & Cross-Cloud Management. Earlier today we made EC2 Run Command available in the China (Beijing) and Asia Pacific (Seoul) Regions.

Our customers are using EC2 Run Command to automate and encapsulate routine system administration tasks. They are creating local users and groups, scanning for and then installing applicable Windows updates, managing services, checking log files, and the like. Because these customers are using EC2 Run Command as a building block, they have told us that they would like to have better visibility into the actual command execution process. They would like to know, quickly and often in detail, when each command and each code block in the command begins executing, when it completes, and how it completed (successfully or unsuccessfully).

In order to support this really important use case, you can now arrange to be notified when the status of a command or a code block within a command changes. In order to provide you with several different integration options, you can receive notifications via CloudWatch Events or via Amazon Simple Notification Service (SNS).

These notifications will allow you to use EC2 Run Command in true building block fashion. You can programmatically invoke commands and then process the results as they arrive. For example, you could create and run a command that captures the contents of important system files and metrics on each instance. When the command is run, EC2 Run Command will save the output in S3. Your notification handler can retrieve the object from S3, scan it for items of interest or concern, and then raise an alert if something appears to be amiss.

Monitoring Execution Using Amazon SNS

Let's run a command on some EC2 instances and monitor the progress using SNS.

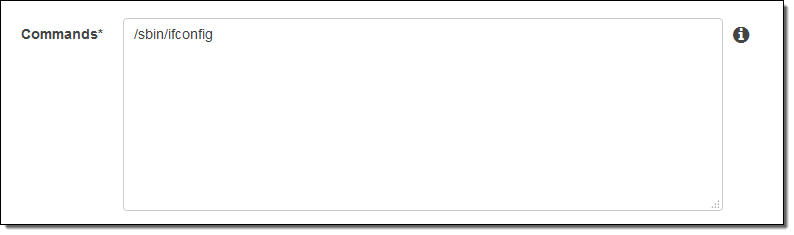

Following the directions (Monitoring Commands), I created an S3 bucket (jbarr-run-output), an SNS topic (command-status), and an IAM role (RunCommandNotifySNS) that allows the on-instance agent to send notifications on my behalf. I also subscribed my email address to the SNS topic, and entered the command:

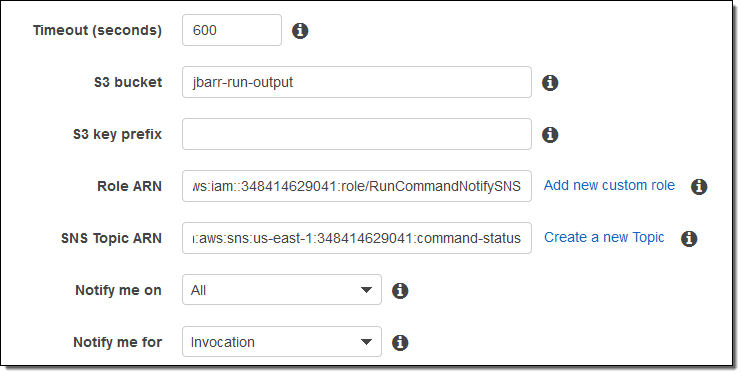

And specified the bucket, topic, and role (further down on the Run a command page):

I chose All so that I would be notified of every possible status change (In Progress, Success, Timed Out, Cancelled, and Failed) and Invocation so that I would receive notifications as the status of each instance changes. I could have chosen to receive notifications at the command level (representing all of the instances) by selecting Command instead of Invocation.
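The same choices can be made when a command is sent through the API. Here's a Python sketch that assembles the arguments for the boto3 send_command call, using the bucket, topic, and role names from this walkthrough. The helper name, the account ID, the Region, the instance ID, the shell command, and the AWS-RunShellScript document are illustrative assumptions:

```python
def run_command_request(instance_ids, commands, bucket, topic_arn, role_arn,
                        events=("All",), notification_type="Invocation") -> dict:
    """Assemble keyword arguments for ssm.send_command with notifications."""
    return {
        "InstanceIds": list(instance_ids),
        "DocumentName": "AWS-RunShellScript",
        "Parameters": {"commands": list(commands)},
        "OutputS3BucketName": bucket,
        # Role that allows notifications to be sent on your behalf.
        "ServiceRoleArn": role_arn,
        "NotificationConfig": {
            "NotificationArn": topic_arn,
            # "All", or a subset such as ["Success", "Failed"].
            "NotificationEvents": list(events),
            # "Invocation" (per instance) or "Command" (whole command).
            "NotificationType": notification_type,
        },
    }


# Placeholder account ID, Region, instance ID, and command.
request = run_command_request(
    instance_ids=["i-0123456789abcdef0"],
    commands=["uptime"],
    bucket="jbarr-run-output",
    topic_arn="arn:aws:sns:us-east-1:123456789012:command-status",
    role_arn="arn:aws:iam::123456789012:role/RunCommandNotifySNS",
)

# To actually send it (requires boto3 and credentials):
# import boto3
# boto3.client("ssm").send_command(**request)
```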

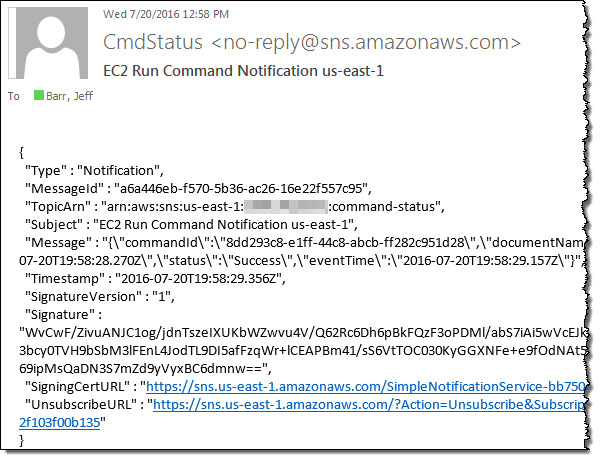

I clicked on Run and received a sequence of emails as the commands were executed on each of the instances that I selected. Here's a sample:

In a real-world environment you would receive and process these notifications programmatically.
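One possible shape for that processing is a Lambda function subscribed to the SNS topic. The Python sketch below pulls the unsuccessful invocations out of a batch of notifications. The message field names (commandId, instanceId, status) and the status spellings are assumptions about the notification format, so check them against a real message before relying on them:

```python
import json

# Statuses treated as needing attention (assumed spellings).
PROBLEM_STATUSES = {"Failed", "TimedOut", "Cancelled"}


def find_problems(event, context=None) -> list:
    """Return (command ID, instance ID, status) for unsuccessful invocations."""
    problems = []
    for record in event.get("Records", []):
        # SNS delivers the notification body as a JSON string.
        message = json.loads(record["Sns"]["Message"])
        status = message.get("status")
        if status in PROBLEM_STATUSES:
            problems.append(
                (message.get("commandId"), message.get("instanceId"), status)
            )
    return problems
```

From here the handler could fetch the command output from the S3 bucket, scan it, and raise an alert.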

Monitoring Execution Using CloudWatch Events

I can also monitor the execution of my commands using CloudWatch Events. I can send the notifications to an AWS Lambda function, an SQS queue, or an Amazon Kinesis stream.

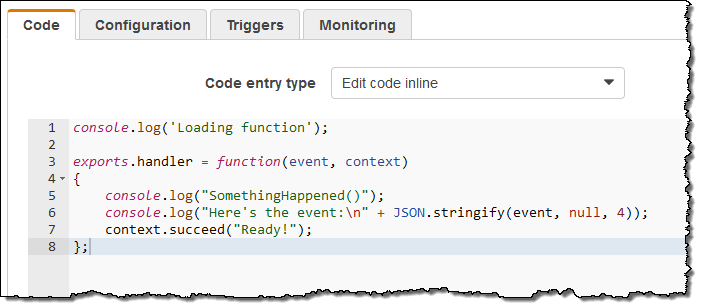

For illustrative purposes, I used a very simple Lambda function:

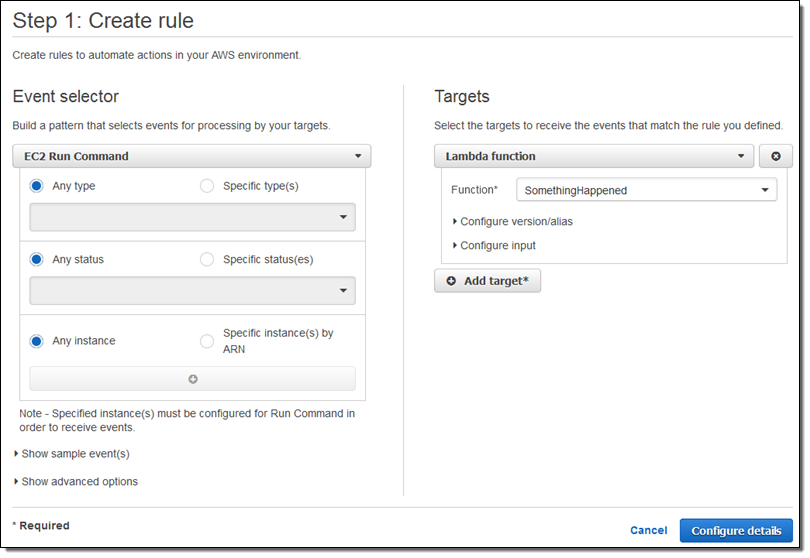
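The function itself appears only as a screenshot. As a stand-in (not the code from the screenshot, and not necessarily in the same language), a very simple Python handler might just log the interesting parts of each event. The detail field names (command-id, status) are assumptions about the Run Command event format:

```python
import json


def lambda_handler(event, context=None):
    """Log the command ID and status carried by a CloudWatch Events event."""
    detail = event.get("detail", {})
    summary = {
        "detail-type": event.get("detail-type"),
        "command-id": detail.get("command-id"),
        "status": detail.get("status"),
    }
    # Anything printed here ends up in the function's CloudWatch log.
    print(json.dumps(summary))
    return summary
```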

I created a rule that would invoke the function for all notifications issued by the Run Command (as you can see below, I could have been more specific if necessary):

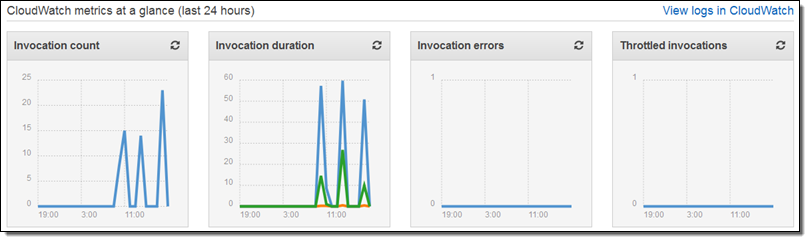

I saved the rule and ran another command, and then checked the CloudWatch metrics a few seconds later:

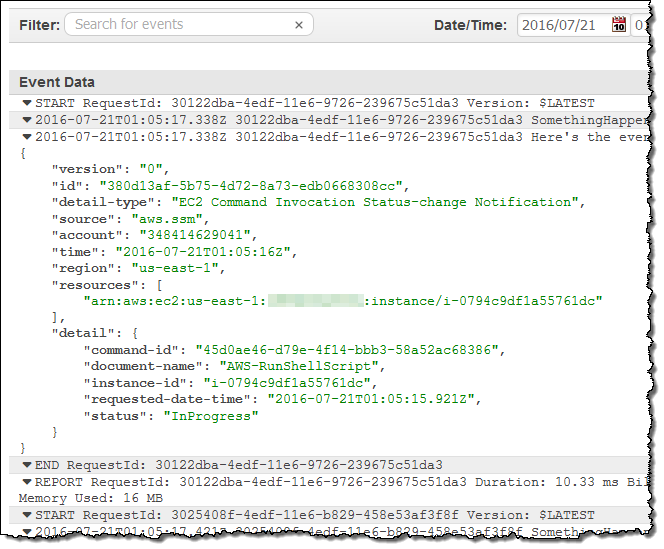

I also checked the CloudWatch log and inspected the output from my code:

Available Now

This feature is available now and you can start using it today.

Monitoring via SNS is available in all AWS Regions except Asia Pacific (Mumbai) and AWS GovCloud (US). Monitoring via CloudWatch Events is available in all AWS Regions except Asia Pacific (Mumbai), China (Beijing), and AWS GovCloud (US).

-Jeff;

Wednesday, July 20, 2016

Is Amazon Echo being Wiretapped by the FBI?

Amazon Aurora Update – Create Cluster from MySQL Backup

After potential AWS customers see the benefits of moving to the cloud, they often ask about the best way to migrate their applications and their data, including large amounts of structured information stored in relational databases.

Today we are launching an important new feature for Amazon Aurora. If you are already making use of MySQL, either on-premises or on an Amazon EC2 instance, you can now create a snapshot backup of your existing database, upload it to Amazon S3, and use it to create an Amazon Aurora cluster. In conjunction with Amazon Aurora's existing ability to replicate data from an existing MySQL database, you can easily migrate from MySQL to Amazon Aurora while keeping your application up and running.

This feature can be used to easily and efficiently migrate large (2 TB and more) MySQL databases to Amazon Aurora with minimal performance impact on the source database. Our testing has shown that this process can be up to 20 times faster than using the traditional mysqldump utility. The database can contain both InnoDB and MyISAM tables; however, we do encourage you to migrate from MyISAM to InnoDB where possible.

Here's an outline of the migration process:

- Source Database Preparation – Enable binary logging in the source MySQL database and ensure that the logs will be retained for the duration of the migration.

- Source Database Backup – Use Percona's XtraBackup tool to create a “hot” backup of the source database. This tool does not lock database tables or rows, does not block transactions, and produces compressed backups. You can direct the tool to create one backup file or multiple smaller files; Amazon Aurora can accommodate either option.

- S3 Upload – Upload the backup to S3. For backups of 5 TB or less, a direct upload via the AWS Management Console or the AWS Command Line Interface (CLI) is generally sufficient. For larger backups, consider using AWS Import/Export Snowball.

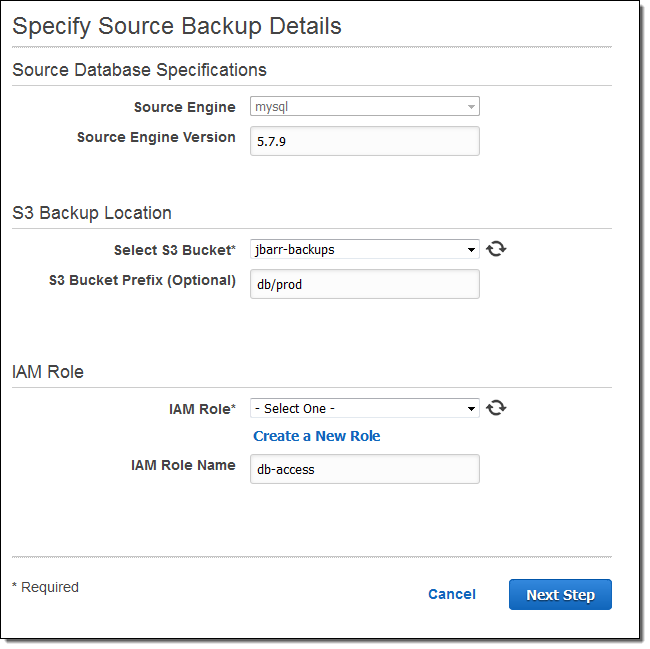

- IAM Role – Create an IAM role that allows Amazon Relational Database Service (RDS) to access the uploaded backup and the bucket it resides within. The role must allow RDS to perform the ListBucket and GetBucketLocation operations on the bucket and the GetObject operation on the backup (you can find a sample policy in the documentation).

- Create Cluster – Create a new Amazon Aurora cluster from the uploaded backup. Click on Restore Aurora DB Cluster from S3 in the RDS Console, enter the version number of the source database, point to the S3 bucket and choose the IAM role, then click on Next Step. Proceed through the remainder of the cluster creation pages (Specify DB Details and Configure Advanced Settings) in the usual way:

Amazon Aurora will process the backup files in alphabetical order.

- Migrate MySQL Schema – Migrate (as appropriate) the users, permissions, and configuration settings in the MySQL INFORMATION_SCHEMA.

- Migrate Related Items – Migrate the triggers, functions, and stored procedures from the source database to the new Amazon Aurora cluster.

- Initiate Replication – Begin replication from the source database to the new Amazon Aurora cluster and wait for the cluster to catch up.

- Switch to Cluster – Point all client applications at the Amazon Aurora cluster.

- Terminate Replication – End replication to the Amazon Aurora cluster.
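Two of the steps above (IAM Role and Create Cluster) can be sketched in Python. The first helper builds a policy that grants exactly the three S3 operations named in the IAM Role step; the second assembles arguments for the boto3 restore_db_cluster_from_s3 call. The helper names and the example bucket, account ID, role name, and version are hypothetical, the Engine value is an assumption that may differ by Aurora version, and the sample policy in the documentation remains the authoritative reference:

```python
def s3_ingestion_policy(bucket: str, prefix: str = "") -> dict:
    """Policy letting RDS list the bucket and read the backup objects."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:ListBucket", "s3:GetBucketLocation"],
                "Resource": f"arn:aws:s3:::{bucket}",
            },
            {
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}*",
            },
        ],
    }


def restore_request(cluster_id: str, bucket: str, role_arn: str,
                    source_version: str, master_user: str,
                    master_password: str, prefix: str = "") -> dict:
    """Assemble keyword arguments for rds.restore_db_cluster_from_s3."""
    request = {
        "DBClusterIdentifier": cluster_id,
        "Engine": "aurora",  # assumption; check the value for your version
        "MasterUsername": master_user,
        "MasterUserPassword": master_password,
        "SourceEngine": "mysql",
        "SourceEngineVersion": source_version,
        "S3BucketName": bucket,
        "S3IngestionRoleArn": role_arn,
    }
    if prefix:
        request["S3Prefix"] = prefix
    return request


# To create the cluster (requires boto3 and credentials):
# import boto3
# boto3.client("rds").restore_db_cluster_from_s3(**restore_request(...))
```

The role also needs a trust relationship that lets RDS assume it, which is not shown in this sketch.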

Given the mission-critical nature of a production-level relational database, a dry run is always a good idea!

Available Now

This feature is available now and you can start using it today in all public AWS regions with the exception of Asia Pacific (Mumbai). To learn more, read Migrating Data from an External MySQL Database to an Amazon Aurora DB Cluster in the Amazon Aurora User Guide.

Jeff;