Building world-class games is a very difficult, time-consuming, and expensive process. The audience is incredibly demanding. They want engaging, social play that spans a wide variety of desktop, console, and mobile platforms. Due to the long lead time inherent in the game development and distribution process, the success or failure of the game can often be determined on launch day, when pent-up demand causes hundreds of thousands or even millions of players to sign in and take the game for a spin.

Behind the scenes, the development process must be up to this challenge. Game creators must be part of a team that includes developers with skills in storytelling, game design, physics, logic design, sound creation, graphics, visual effects, and animation. If the game is network-based, the team must also include expertise in scaling, online storage, network communication & management, and security.

With development and creative work that can take 18 to 36 months, today's games represent a considerable financial and reputational risk for the studio. Each new game is a make-or-break affair.

New AWS Game Services

Today I would like to tell you about a pair of new AWS products that are designed for use by professional game developers building cloud-connected, cross-platform games. We started with several proven, industry-leading engines and developer tools, added a considerable amount of our own code, and integrated the entire package with our Twitch video platform and community, while also mixing in access to relevant AWS messaging, identity, and storage services. Here's what we are announcing today:

Lumberyard - A game engine and development environment designed for professional developers. A blend of new and proven technologies from CryEngine, Double Helix, and AWS, Lumberyard simplifies and streamlines game development. As a game engine, it supports development of cloud-connected and standalone 3D games, with support for asset management, character creation, AI, physics, audio, and more. On the development side, the Lumberyard IDE allows you to design indoor and outdoor environments, starting from a blank canvas. You (I just promoted you to professional game developer) can take advantage of built-in content workflows and an asset pipeline, editing game assets in Photoshop, Maya, or 3ds Max and bringing them into the IDE afterward. You can program your game in the traditional way using C++ and Visual Studio (including access to the AWS SDK for C++) or you can use our Flow Graph tool and the cool new Cloud Canvas to create cloud-connected gameplay features using visual scripting.

Amazon GameLift - Many modern games include a server or backend component that must scale in proportion to the number of active sessions. Amazon GameLift will help you to deploy and scale session-based multiplayer game servers for the games that you build using Lumberyard. You simply upload your game server image to AWS and deploy the image into a fleet of EC2 instances that scales up as players connect and play. You don't need to invest in building, scaling, running, or monitoring your own fleet of servers. Instead, you pay a small fee per daily active user (DAU) and the usual EC2 On-Demand rates for the compute capacity, EBS storage, and bandwidth that your users consume.

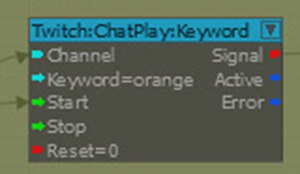

Twitch Integration - Modern gamers are a very connected bunch. When they are not playing themselves, they like to connect and interact with other players and gaming enthusiasts on Twitch. Professional and amateur players display their talents on Twitch and create large, loyal fan bases. To take this trend even further and to foster deeper connections and stronger communities, games built with Lumberyard will be able to take advantage of two new Twitch integration features. Twitch ChatPlay allows you to build games that respond to keywords in a Twitch chat stream; for example, the audience can vote to have the player take the most desired course of action. Twitch JoinIn allows a broadcaster to invite a member of the audience into the game from within the chat channel.

These services, like many other parts of AWS, are designed to allow you to focus on the unique and creative aspects of your game, with an emphasis on rapid turnaround and easy iteration so that you can continue to hone your gameplay until it reaches the desired level of engagement and fun.

Support Services - As the icing on this cake, we are also launching a range of support options including a dedicated Lumberyard forum and a set of tutorials (both text and video). Multiple tiers of paid AWS support are also available.

Developing with Lumberyard

Lumberyard is at the heart of today's announcement. As I mentioned earlier, it is designed for professional developers and supports development of high-quality, cross-platform games. We are launching with support for the following environments:

- Windows - Vista, Windows 7, 8, and 10.

- Console - PlayStation 4 and Xbox One.

Support for mobile devices and VR headsets is in the works and should be available within a couple of months.

The Lumberyard development environment runs on your Windows PC or laptop. You'll need a fast, quad-core processor, at least 8 GB of memory, 200 GB of free disk space, and a high-end video card with 2 GB or more of memory and DirectX 11 compatibility. You will also need Visual Studio 2013 Update 4 (or newer) and the Visual C++ Redistributables package for Visual Studio 2013.
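The Lumberyard Launcher takes care of checking your installation. Purely as an illustration, the numeric minimums above can be written down as data and checked in a few lines. The `Workstation` record, its field names, and the `missing_requirements` helper are hypothetical, and this sketch does not look for the Visual C++ Redistributables.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Minimums from the requirements paragraph above.
MIN_CPU_CORES = 4              # quad-core processor
MIN_RAM_GB = 8
MIN_FREE_DISK_GB = 200
MIN_VRAM_GB = 2
MIN_DIRECTX = 11
MIN_VISUAL_STUDIO = (2013, 4)  # Visual Studio 2013 Update 4, as (year, update)


@dataclass
class Workstation:
    """Hypothetical description of a development PC."""
    cpu_cores: int
    ram_gb: float
    free_disk_gb: float
    vram_gb: float
    directx_version: int
    visual_studio: Tuple[int, int]  # (release year, update number)


def missing_requirements(ws: Workstation) -> List[str]:
    """Return a readable list of unmet minimums (empty if all are met)."""
    problems = []
    if ws.cpu_cores < MIN_CPU_CORES:
        problems.append(f"CPU has {ws.cpu_cores} cores; need {MIN_CPU_CORES}")
    if ws.ram_gb < MIN_RAM_GB:
        problems.append(f"{ws.ram_gb} GB RAM; need {MIN_RAM_GB} GB")
    if ws.free_disk_gb < MIN_FREE_DISK_GB:
        problems.append(f"{ws.free_disk_gb} GB free disk; need {MIN_FREE_DISK_GB} GB")
    if ws.vram_gb < MIN_VRAM_GB:
        problems.append(f"{ws.vram_gb} GB video memory; need {MIN_VRAM_GB} GB")
    if ws.directx_version < MIN_DIRECTX:
        problems.append(f"DirectX {ws.directx_version}; need DirectX {MIN_DIRECTX}")
    # Tuple comparison treats any later release year as newer than 2013 Update 4.
    if ws.visual_studio < MIN_VISUAL_STUDIO:
        problems.append("Visual Studio 2013 Update 4 or newer is required")
    return problems


laptop = Workstation(cpu_cores=2, ram_gb=8, free_disk_gb=120, vram_gb=2,
                     directx_version=11, visual_studio=(2013, 3))
for problem in missing_requirements(laptop):
    print(problem)
```

This hypothetical laptop falls short on cores, disk space, and Visual Studio version; memory and video card meet the minimums exactly.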

The Lumberyard Zip file contains the binaries, templates, assets, and configuration files for the Lumberyard Editor. It also includes binaries and source code for the Lumberyard game engine. You can use the engine as-is, you can dig into the source code for reference purposes, or you can customize it in order to further differentiate your game. The Zip file also contains the Lumberyard Launcher. This program makes sure that you have properly installed and configured Lumberyard and the third-party runtimes, SDKs, tools, and plugins.

The Lumberyard Editor encapsulates the game under development and a suite of tools that you can use to edit the game's assets.

The Lumberyard Editor includes a suite of editing tools (each of which could be the subject of an entire blog post) including an Asset Browser, a Layer Editor, a LOD Generator, a Texture Browser, a Material Editor, Geppetto (character and animation tools), a Mannequin Editor, Flow Graph (visual programming), an AI Debugger, a Track View Editor, an Audio Controls Editor, a Terrain Editor, a Terrain Texture Layers Editor, a Particle Editor, a Time of Day Editor, a Sun Trajectory Tool, a Composition Editor, a Database View, and a UI Editor. All of the editors (and much more) are accessible from one of the toolbars at the top.

In order to allow you to add functionality to your game in a selective, modular form, Lumberyard uses a code packaging system that we call Gems. You simply enable the desired Gems and they'll be built and included in your finished game binary automatically. Lumberyard includes Gems for AWS access, Boids (for flocking behavior), clouds, game effects, access to GameLift, lightning, physics, rain, snow, tornadoes, user interfaces, multiplayer functions, and a collection of woodlands assets (for detailed, realistic forests).

Coding with Flow Graph and Cloud Canvas

Traditionally, logic for games was built by dedicated developers, often in C++ and with the usual turnaround time for an edit/compile/run cycle. While this option is still open to you if you use Lumberyard, you also have two other options: Lua and Flow Graph.

Flow Graph is a modern and approachable visual scripting system that allows you to implement complex game logic without writing or modifying any code. You can use an extensive library of pre-built nodes to set up gameplay, control sounds, and manage effects.

Flow graphs are made from nodes and links; a single level can contain multiple graphs, and they can all be active at the same time. Nodes represent game entities or actions. Links connect the output of one node to the input of another one. Inputs have a type (Boolean, Float, Int, String, Vector, and so forth). An output port can be connected to an input port of any type; automatic type conversion is performed where possible.

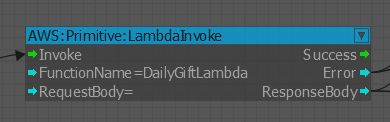

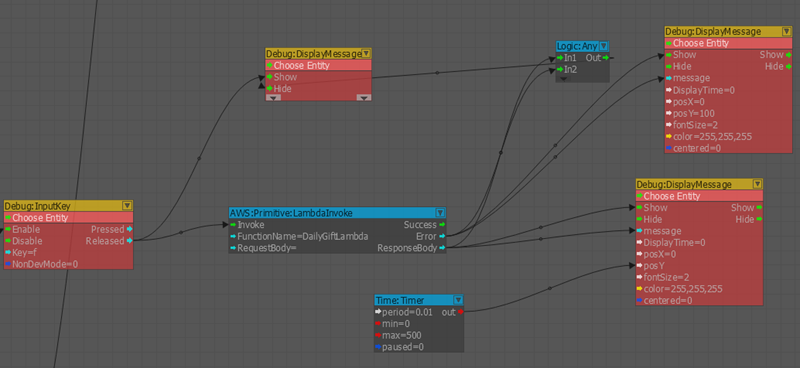

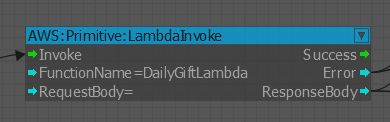
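To make the node, link, and type-conversion rules concrete, here is a small Python model. It is a sketch under stated assumptions, not Lumberyard's Flow Graph implementation (which is C++): the `Node` and `FlowGraph` classes, the port names, and the choice to silently drop a value whose conversion fails are all hypothetical, and only the scalar types are modeled.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Tuple


def to_bool(value: Any) -> bool:
    """Interpret common string spellings of true; otherwise use Python truthiness."""
    if isinstance(value, str):
        return value.strip().lower() in ("1", "true", "yes")
    return bool(value)


# Converter per input-port type. A converter raises TypeError or ValueError
# when the value cannot be converted (for example int("abc")).
CONVERTERS: Dict[str, Callable[[Any], Any]] = {
    "Boolean": to_bool,
    "Int": int,
    "Float": float,
    "String": str,
}


@dataclass
class Node:
    """A node with typed input ports; the latest value per port is kept in `inputs`."""
    name: str
    input_types: Dict[str, str] = field(default_factory=dict)
    inputs: Dict[str, Any] = field(default_factory=dict)


@dataclass
class FlowGraph:
    # Each link is (source node, output port, target node, input port).
    links: List[Tuple[Node, str, Node, str]] = field(default_factory=list)

    def link(self, src: Node, out_port: str, dst: Node, in_port: str) -> None:
        self.links.append((src, out_port, dst, in_port))

    def emit(self, src: Node, out_port: str, value: Any) -> None:
        """Send a value from an output port to every linked input, converting as needed."""
        for s, o, dst, in_port in self.links:
            if s is not src or o != out_port:
                continue
            convert = CONVERTERS[dst.input_types[in_port]]
            try:
                dst.inputs[in_port] = convert(value)
            except (TypeError, ValueError):
                pass  # conversion not possible: this link drops the value


timer = Node("Timer")
counter = Node("Counter", {"Increment": "Int"})
label = Node("Label", {"Text": "String"})
graph = FlowGraph()
graph.link(timer, "Out", counter, "Increment")
graph.link(timer, "Out", label, "Text")
graph.emit(timer, "Out", 3.7)
print(counter.inputs, label.inputs)  # {'Increment': 3} {'Text': '3.7'}
```

One output fans out to two inputs of different types, and each link converts the same float independently.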

There are over 30 distinct types of nodes, including a set (known as Cloud Canvas) that provides access to various AWS services. These include two nodes for Amazon Simple Queue Service (SQS), four nodes for Amazon Simple Notification Service (SNS), seven nodes that provide read/write access to Amazon DynamoDB, one node that invokes an AWS Lambda function, and another that manages player credentials using Amazon Cognito. All of the game's calls to AWS are made via an AWS Identity and Access Management (IAM) user that you configure in Cloud Canvas.

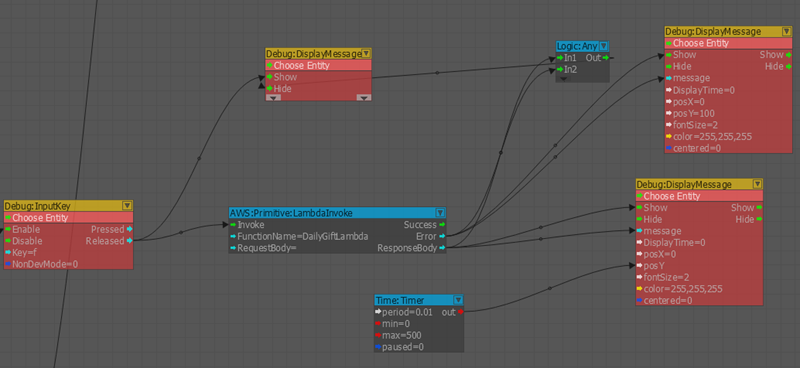

Here's a node that invokes a Lambda function named DailyGiftLambda:

Here is a flow graph that uses Lambda and DynamoDB to implement a "Daily Gift" function:
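The graph itself is visual, but the server-side rule behind a daily gift is easy to state in code. Below is a minimal Python sketch of what a function like DailyGiftLambda might do, assuming the rule is "one gift per player per UTC calendar day." The in-memory dictionary stands in for the DynamoDB table, and the `"playerId"` event field and helper names are hypothetical; only the `(event, context)` handler signature is Lambda's own convention.

```python
from datetime import datetime, timezone
from typing import Any, Dict

# In-memory stand-in for a DynamoDB table: player ID -> UTC date of last gift.
# Lambda memory does not persist reliably, so a real function would use DynamoDB.
GIFT_TABLE: Dict[str, str] = {}


def claim_daily_gift(table: Dict[str, str], player_id: str, now: datetime) -> bool:
    """Grant at most one gift per player per UTC calendar day."""
    today = now.astimezone(timezone.utc).date().isoformat()
    if table.get(player_id) == today:
        return False          # already claimed today
    table[player_id] = today  # record the claim, then grant the gift
    return True


def lambda_handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    """Lambda entry point; the "playerId" event field is a hypothetical contract."""
    granted = claim_daily_gift(GIFT_TABLE, event["playerId"], datetime.now(timezone.utc))
    return {"granted": granted}


gifts: Dict[str, str] = {}
morning = datetime(2016, 2, 9, 8, 0, tzinfo=timezone.utc)
print(claim_daily_gift(gifts, "player-1", morning))                   # True
print(claim_daily_gift(gifts, "player-1", morning.replace(hour=22)))  # False
```

In a production version the read and the write would likely be made atomic (for example with a DynamoDB conditional write) so that two simultaneous requests cannot both claim the gift.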

As usual, I have barely scratched the surface here! To learn more, read the Cloud Canvas documentation in the Lumberyard User Guide.

Deploying With Amazon GameLift

If your game needs a scalable, cloud-based runtime environment, you should definitely take a look at Amazon GameLift.

You can use it to host many different types of shared, connected, regularly synchronized games, including first-person shooters, survival & sandbox games, racing games, sports games, and MOBA (Multiplayer Online Battle Arena) games.

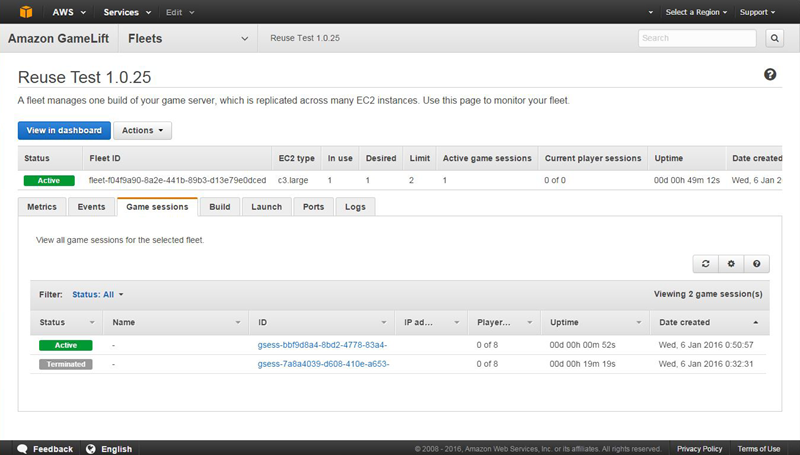

After you build your server-side logic, you simply upload it to Amazon GameLift. It will be converted to a Windows-based AMI (Amazon Machine Image) in a matter of minutes. Once the AMI is ready, you can create an Amazon GameLift fleet (or a new version of an existing one), point it at the AMI, and your backend will be ready to go.

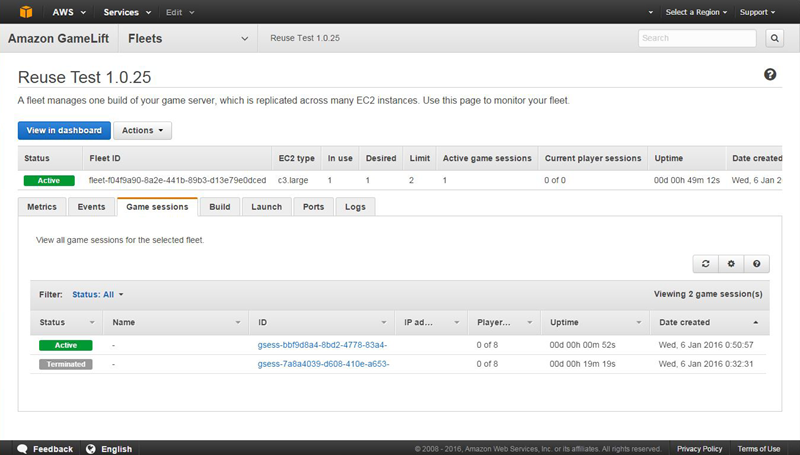

Your fleets, and the game sessions running on each fleet, are visible in the Amazon GameLift Console:

Your Flow Graph code can use the GameLift Gem to create an Amazon GameLift session and to start the session service.
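Amazon GameLift handles session placement and scaling for you. As a rough mental model only, the Python toy below shows the idea of a fleet that grows as sessions are created: each new session goes to the first instance with room, and a new instance is added when none has room. The per-instance capacity, the instance limit, and the `Fleet.place_session` method are hypothetical and are not part of the GameLift API; scale-down is not modeled.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Instance:
    instance_id: str
    sessions: List[str] = field(default_factory=list)


@dataclass
class Fleet:
    sessions_per_instance: int  # assumed capacity of one instance
    max_instances: int          # assumed scaling limit for the fleet
    instances: List[Instance] = field(default_factory=list)

    def place_session(self, session_id: str) -> Optional[str]:
        """Put a session on the first instance with room, scaling up if none has room.

        Returns the hosting instance ID, or None if the fleet is full.
        """
        for inst in self.instances:
            if len(inst.sessions) < self.sessions_per_instance:
                inst.sessions.append(session_id)
                return inst.instance_id
        if len(self.instances) < self.max_instances:
            inst = Instance(instance_id=f"i-{len(self.instances) + 1}")
            inst.sessions.append(session_id)
            self.instances.append(inst)
            return inst.instance_id
        return None


fleet = Fleet(sessions_per_instance=2, max_instances=2)
placements = [fleet.place_session(f"session-{n}") for n in range(5)]
print(placements)  # ['i-1', 'i-1', 'i-2', 'i-2', None]
```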

To learn more, consult the Amazon GameLift documentation.

Twitch Integration

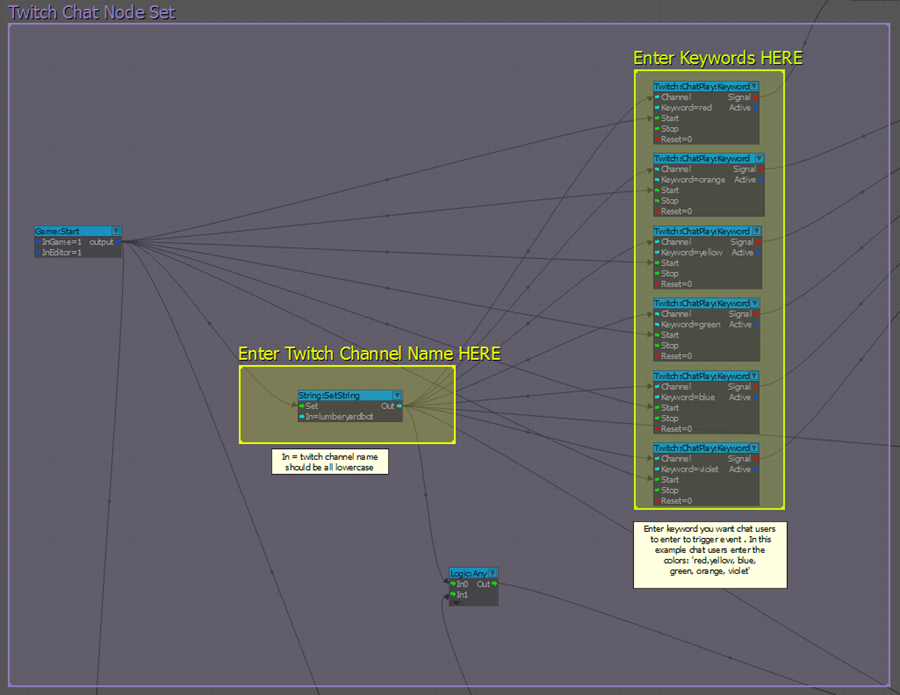

Last but definitely not least, your games can integrate with Twitch via Twitch ChatPlay and Twitch JoinIn.

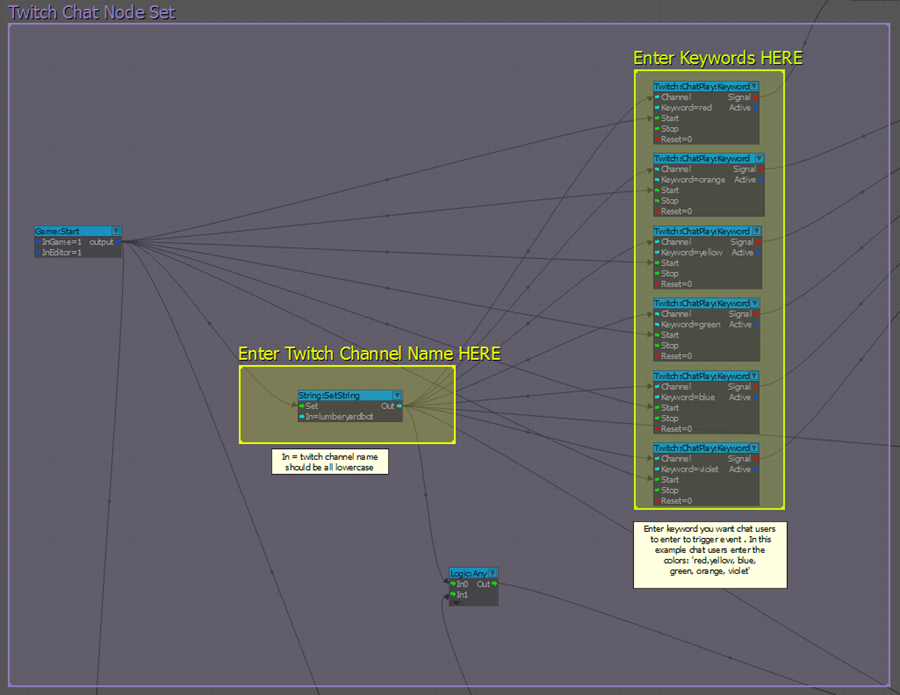

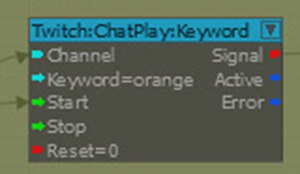

As I mentioned earlier, you can create games that react to keywords entered in a designated Twitch channel. For example, here's a Flow Graph that listens for the keywords red, yellow, blue, green, orange, and violet.
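ChatPlay does the keyword matching and hands the results to your graph. What a game does with those matches is up to you; one common use is an audience vote. This Python sketch tallies the six keywords above across a batch of chat messages and picks a winner. Counting each keyword at most once per message and breaking ties in favor of the keyword listed first are assumptions; a real game might also limit each viewer to one vote.

```python
from collections import Counter
from typing import Iterable, Optional, Sequence

# The keywords from the Flow Graph example above.
KEYWORDS = ("red", "yellow", "blue", "green", "orange", "violet")


def tally_votes(messages: Iterable[str], keywords: Sequence[str] = KEYWORDS) -> Counter:
    """Count, per keyword, how many chat messages mention it (once per message)."""
    counts: Counter = Counter()
    for message in messages:
        words = {w.strip("!?.,:;").lower() for w in message.split()}
        for keyword in keywords:
            if keyword in words:
                counts[keyword] += 1
    return counts


def winning_keyword(counts: Counter, keywords: Sequence[str] = KEYWORDS) -> Optional[str]:
    """Return the most-mentioned keyword; ties go to the keyword listed first."""
    if not counts:
        return None
    return max(keywords, key=lambda k: (counts[k], -keywords.index(k)))


chat = ["Red!", "go blue", "blue blue", "RED please", "violet"]
votes = tally_votes(chat)
print(votes["red"], votes["blue"], winning_keyword(votes))  # 2 2 red
```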

Pricing and Availability

Lumberyard and Amazon GameLift are available now and you can start building your games today!

You can build and run connected and standalone games using Lumberyard at no charge. You are responsible for the AWS charges for any calls made to AWS services using the IAM user configured in Cloud Canvas, or through calls made using the AWS SDK for C++, along with any charges for the use of GameLift.

Amazon GameLift is launching in the US East (Northern Virginia) and US West (Oregon) regions, and will be coming to other AWS regions as well. As part of the AWS Free Tier, you can run a fleet consisting of one c3.large instance for up to 125 hours per month for one year. After that, you pay the usual On-Demand rates for the EC2 instances that you use, plus the charge for 50 GB/month of EBS storage per instance, and $1.50 per month for every 1,000 daily active users.
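As a worked example of the two figures stated above, the Python sketch below computes the instance-hours left over after the 125 free hours and the per-DAU fee. It assumes the DAU fee is prorated linearly (rather than billed per whole block of 1,000) and uses a 30-day month for the hours example; EC2 On-Demand and EBS rates are not given here, so they are left out.

```python
from decimal import Decimal

FREE_TIER_HOURS_PER_MONTH = 125     # one c3.large, first year only
FEE_PER_1000_DAU = Decimal("1.50")  # per month


def billable_instance_hours(hours_used: float, free_hours: float = FREE_TIER_HOURS_PER_MONTH) -> float:
    """Instance-hours left to bill at On-Demand rates after the free allowance."""
    return max(0, hours_used - free_hours)


def monthly_dau_fee(daily_active_users: int) -> Decimal:
    """DAU fee, assuming linear proration (not rounded up per block of 1,000)."""
    return (FEE_PER_1000_DAU * daily_active_users / 1000).quantize(Decimal("0.01"))


print(billable_instance_hours(24 * 30))  # 595
print(monthly_dau_fee(50_000))           # 75.00
```

So a single always-on c3.large uses its free allowance in a little over five days, and a game averaging 50,000 daily active users would pay $75 per month in DAU fees on top of its EC2 and EBS charges.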

--

Jeff;