My colleague Sanjay Padhi is part of the AWS Scientific Computing team. He wrote the guest post below to share the story of how AWS provided computational resources that aided in an important scientific discovery.

-Jeff;

The Higgs boson (sometimes referred to as the God Particle), responsible for providing insight into the origin of mass, was discovered in 2012 by the world's largest experiments, ATLAS and CMS, at the Large Hadron Collider (LHC) at CERN in Geneva, Switzerland. The theorists behind this discovery were awarded the 2013 Nobel Prize in Physics.

Deep underground on the border between France and Switzerland, the LHC is the world's largest (17 miles in circumference) and highest-energy particle accelerator. It explores nature on smaller scales than any human invention has ever explored before.

From Experiment to Raw Data

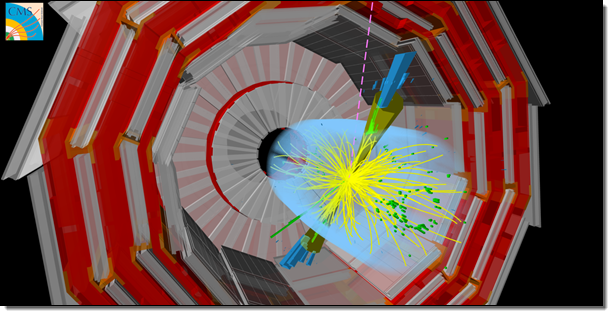

The high energy particle collisions turn mass into energy, which then turns back into mass, creating new particles that are observed in the CMS detector. This detector is 69 feet long, 49 feet wide and 49 feet high, and sits in a cavern 328 feet underground near the village of Cessy in France. The raw data from the CMS is recorded every 25 nanoseconds at a rate of approximately 1 petabyte per second.
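To put those two figures side by side, here is a back-of-the-envelope sketch. It assumes a decimal petabyte (10**15 bytes) and that the quoted rate is spread evenly across recordings; both are simplifying assumptions made for illustration, not details from the CMS experiment.

```python
# Figures quoted in the text above.
NS_PER_SECOND = 1_000_000_000
recording_interval_ns = 25           # one recording every 25 nanoseconds
raw_rate_bytes_per_s = 10**15        # ~1 petabyte per second (assumed decimal PB)

# How many recordings happen each second at that interval.
recordings_per_s = NS_PER_SECOND // recording_interval_ns

# Average data volume per recording, assuming the rate is spread evenly.
bytes_per_recording = raw_rate_bytes_per_s // recordings_per_s

print(f"recordings per second: {recordings_per_s:,}")
print(f"average megabytes per recording: {bytes_per_recording / 10**6:.0f}")
```

Under these assumptions, the detector records roughly 40 million times per second, averaging about 25 megabytes each time.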

After online and offline processing of the raw data at the CERN Tier 0 data center, the datasets are distributed to 7 large Tier 1 data centers across the world within 48 hours, ready for further processing and analysis by scientists. The CMS collaboration, one of the largest in the world, consists of more than 3,000 participating members from over 180 institutes and universities in 43 countries.

Processing at Fermilab

Fermilab is one of 16 National Laboratories operated by the United States Department of Energy. Located just outside Batavia, Illinois, Fermilab serves as one of the Tier 1 data centers for CERN's CMS experiment.

With the increase in LHC collision energy last year, the demand for data assimilation, event simulations, and large-scale computing increased as well. With this increase came a desire to maximize cost efficiency by dynamically provisioning resources on an as-needed basis.

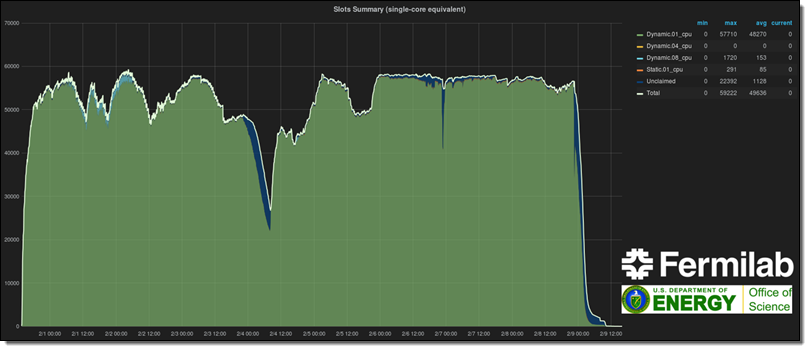

In order to address this issue, the Fermilab Scientific Computing Division launched the HEP (High Energy Physics) Cloud project in June of 2015. They planned to develop a virtual facility that would provide a common interface to access a variety of computing resources including commercial clouds. Using AWS, the HEP Cloud project successfully demonstrated the ability to add 58,000 cores elastically to their on-premises facility for the CMS experiment.

The image below depicts one of the simulations that was run on AWS. It shows how the collision of two protons creates energy that then becomes new particles.

The additional 58,000 cores represent a 4x increase in Fermilab's computational capacity, all of which are dedicated to the CMS experiment in order to generate and reconstruct Monte Carlo simulation events. More than 500 million events were fully simulated in 10 days using 2.9 million jobs. Without help from AWS, this job would have taken 6 weeks to complete using the on-premises compute resources at Fermilab.
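The numbers in the paragraph above can be combined into a few derived rates. This is a rough sketch using only the quoted figures; it treats "more than 500 million" as exactly 500 million, so the event counts are lower bounds, and the variable names are purely illustrative.

```python
# Figures quoted in the text above.
events_simulated = 500_000_000   # "more than 500 million events" (lower bound)
jobs = 2_900_000                 # 2.9 million jobs
days_with_aws = 10
days_on_premises_only = 6 * 7    # the 6-week estimate, in days

events_per_day = events_simulated / days_with_aws
events_per_job = events_simulated / jobs
jobs_per_day = jobs / days_with_aws
speedup = days_on_premises_only / days_with_aws

print(f"events per day: {events_per_day:,.0f}")
print(f"events per job: {events_per_job:.1f}")
print(f"jobs per day:   {jobs_per_day:,.0f}")
print(f"speedup:        {speedup:.1f}x")
```

The resulting 4.2x reduction in wall-clock time is roughly in line with the quoted 4x increase in capacity.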

This simulation was done in preparation for one of the major international high energy physics conferences, Rencontres de Moriond. Physicists across the world will use these simulations to probe nature in detail and will share their findings with their international colleagues during the conference.

Saving Money with HEP Cloud

The HEP Cloud project aims to minimize the costs of computation. The R&D and demonstration effort was supported by an award from the AWS Cloud Credit for Research.

HEP Cloud's decision engine, the brain of the facility, has several duties. It oversees EC2 Spot Market price fluctuations using tools and techniques provided by Amazon's Spot team, initializes Amazon EC2 instances using HTCondor, tracks the DNS names of the instances using Amazon Route 53, and makes use of AWS CloudFormation templates for infrastructure as code.
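To give a feel for what a price-aware provisioning decision might look like, here is a minimal sketch. It is not the HEP Cloud decision engine, whose internals are not described in this post. The `SpotOffer` type, the `plan_burst` function, the instance type names, and every price below are hypothetical, and the sketch deliberately makes no AWS API calls. It assumes a simple greedy policy: take the cheapest offers per core-hour first and skip anything above a price ceiling.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class SpotOffer:
    """One hypothetical Spot price quote; all fields are illustrative."""
    instance_type: str
    zone: str
    vcpus: int
    price_per_hour: float  # USD per instance-hour
    available: int         # instances assumed obtainable at this price

    @property
    def price_per_core_hour(self) -> float:
        return self.price_per_hour / self.vcpus


def plan_burst(offers, cores_needed, max_price_per_core_hour):
    """Greedily fill the core demand from the cheapest acceptable offers.

    Returns a list of (offer, instance_count) pairs. Offers priced above
    the ceiling are never used, so the plan may cover fewer cores than
    requested.
    """
    plan = []
    remaining = cores_needed
    for offer in sorted(offers, key=lambda o: o.price_per_core_hour):
        if remaining <= 0:
            break
        if offer.price_per_core_hour > max_price_per_core_hour:
            break  # sorted order: every later offer costs at least as much
        wanted = math.ceil(remaining / offer.vcpus)
        count = min(wanted, offer.available)
        if count > 0:
            plan.append((offer, count))
            remaining -= count * offer.vcpus
    return plan


# Made-up quotes, for illustration only.
offers = [
    SpotOffer("type-a", "zone-1", vcpus=8, price_per_hour=0.16, available=100),
    SpotOffer("type-b", "zone-2", vcpus=16, price_per_hour=0.24, available=50),
    SpotOffer("type-c", "zone-1", vcpus=4, price_per_hour=0.20, available=500),
]
plan = plan_burst(offers, cores_needed=1000, max_price_per_core_hour=0.03)
for offer, count in plan:
    print(f"{count} x {offer.instance_type} in {offer.zone}")
```

With these made-up quotes, the plan takes all 50 `type-b` instances (800 cores), then 25 `type-a` instances (200 cores), and never touches `type-c`, which sits above the ceiling. A real engine would also need to weigh factors this sketch ignores, such as the risk of Spot interruption and data transfer costs.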

While on the road to success, the project team had to overcome several challenges, ranging from fine-tuning configurations to optimizing their use of Amazon S3 and other resources. For example, they devised a strategy to distribute the auxiliary data across multiple AWS Regions in order to minimize storage costs and data-access latency.

Automatic Scaling into AWS

The figure below shows elastic, automatic expansion of Fermilab's Computing Facility into the AWS Cloud using Spot instances for CMS workflows. Monitoring of the resources was done using open source software provided by Grafana with custom modifications provided by the HEP Cloud.

Panagiotis Spentzouris, head of the Scientific Computing Division at Fermilab, told me:

Modern HEP experiments require massive computing resources in irregular cycles, so it is imperative for the success of our program that our computing facilities can rapidly expand and contract resources to match demand. Using commercial clouds is an important ingredient for achieving this goal, and our work with AWS on the CMS experiment's workloads through HEPCloud was a great success in demonstrating the value of this approach.

I hope that you enjoyed this brief insight into the ways in which AWS is helping to explore the frontiers of physics!

- Sanjay Padhi, Ph.D, AWS Scientific Computing
