Saturday, January 30, 2016

Poor Bezos…

A New Outdoor Bluetooth Speaker with Built in Microphone

Now Available: Improved Training Course for AWS Developers

My colleague Mike Stroh is part of our sales training team. He wrote the guest post below to introduce you to our newest AWS training courses.

—Jeff;

We routinely tweak our 3-day AWS technical training courses to keep pace with AWS platform updates and to incorporate learner feedback and the latest best practices.

Today I want to tell you about some exciting enhancements to Developing on AWS. Whether you’re moving applications to AWS or developing specifically for the cloud, this course can show you how to use the AWS SDK to create secure, scalable cloud applications that tap the full power of the platform.

What’s New

We’ve made a number of updates to the course—most stem directly from the experiences and suggestions of developers who took previous versions of the course. Here are some highlights of what’s new:

- Additional Programming Language Support – The course’s 8 practice labs now support Java, .NET, Python, and JavaScript (for Node.js and the browser), plus the Windows and Linux operating systems.

- Balance of Concepts and Code – The updated course expands coverage of key concepts, best practices, and troubleshooting tips for AWS services to help students build a mental model before diving into code. Students then use an AWS SDK to develop apps that apply these concepts in hands-on labs.

- AWS SDK Labs – Practice labs are designed to emphasize the AWS SDK, reflecting how developers actually work and create solutions. Lab environments now include EC2 instances preloaded with all required programming language SDKs, developer tools, and IDEs. Students can simply log in and start learning!

- Relevant to More Developers – The additional programming language support helps make the course more useful to both startup and enterprise developers.

- Expanded Coverage of Developer-Oriented AWS Services – The updated course puts more focus on the AWS services relevant to application development. So there’s expanded coverage of Amazon DynamoDB, plus new content on AWS Lambda, Amazon Cognito, Amazon Kinesis Streams, Amazon ElastiCache, AWS CloudFormation, and others.

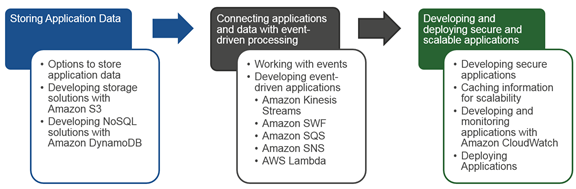

Here’s a map that will help you to understand how the course flows from topic to topic:

How to Enroll

For full course details, look over the Developing on AWS syllabus, then find a class near you. To see more AWS technical courses, visit AWS Training & Certification.

— Mike Stroh, Content & Community Manager

Thursday, January 28, 2016

DSLR-like Camera for the rumored iPhone 7

Amazon WorkSpaces Update – Support for Audio-In, High DPI Devices, and Saved Registrations

Regular readers of this blog will know that I am a huge fan of Amazon WorkSpaces. In fact, after checking my calendar, I verified that every blog post I have written in the last 10 months has been done from within my WorkSpace. Regardless of my location—office, home, or hotel room—performance, availability, and functionality have all been excellent. Until you have experienced a persistent, cloud-based desktop for yourself you won’t know what you are missing!

Today, I am pleased to be able to tell you about three new features for WorkSpaces, each designed to make the service even more useful:

- Audio-In – You can now make and receive calls from your WorkSpace using popular communication tools such as Lync, Skype, and WebEx.

- High DPI Device Support – You can now take advantage of High DPI displays found on devices like the Surface Pro 4 tablet and the Lenovo Yoga laptop.

- Saved Registration Codes – You can now save multiple registration codes in the same client application.

Audio-In

Being able to make and to receive calls from your desktop can boost your productivity. Using the newest WorkSpaces clients for Windows and Mac, you can make and receive calls using popular communication tools like Lync, Skype, and WebEx. Simply connect an analog or USB audio headset to your local client device and start making calls! This functionality is enabled for all newly launched WorkSpaces; existing WorkSpaces may need a restart. With the launch of this feature, voice communication with headsets is available to you at no additional charge in all regions where WorkSpaces are available today.

When a WorkSpace is created using a custom image, the audio-in updates are applied during the provisioning process and will take some time. To avoid this, you (or your WorkSpaces administrator) can create a new custom image after the updates have been applied to an existing WorkSpace.

High DPI Devices

To support the increasing popularity of high DPI (Full HD, Ultra HD, and QHD+) displays, we added the ability to automatically scale the in-session experience of WorkSpaces to match your local DPI settings. This means that fonts and icon sizes will match your preferred settings on high DPI devices, making the WorkSpaces experience more natural. Simply use the newest WorkSpaces clients for Windows and Mac and enjoy this enhancement immediately.

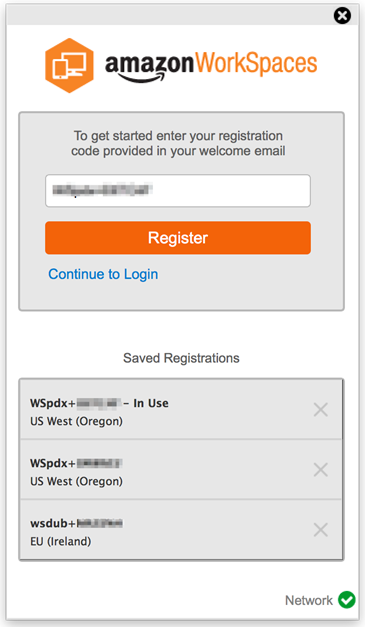

Saved Registration Codes

Many customers access multiple WorkSpaces spread across several directories and/or regions and would prefer not to have to copy and paste registration codes to make the switch. You can now save up to 10 registration codes within the client application, and switch between them with a couple of clicks. You can control all of this through the new Manage Registrations screen:

To learn more about Amazon WorkSpaces, visit the Amazon WorkSpaces page.

—Jeff;

Wednesday, January 27, 2016

New: Title-Specific Kindle Gift Cards

EMR 4.3.0 – New & Updated Applications + Command Line Export

My colleague Jon Fritz wrote the blog post below to introduce you to some new features of Amazon EMR.

— Jeff;

Today we are announcing Amazon EMR release 4.3.0, which adds support for Apache Hadoop 2.7.1, Apache Spark 1.6.0, Ganglia 3.7.2, and a new sandbox release for Presto (0.130). We have also enhanced our maximizeResourceAllocation setting for Spark and added an AWS CLI Export feature to generate a create-cluster command from the Cluster Details page in the AWS Management Console.

New Applications in Release 4.3.0

Amazon EMR provides an easy way to install and configure distributed big data applications in the Hadoop and Spark ecosystems on managed clusters of Amazon EC2 instances. You can create Amazon EMR clusters from the Amazon EMR Create Cluster Page in the AWS Management Console, from the AWS Command Line Interface (CLI), or by using an SDK with an EMR API. In the latest release, we added support for new versions of the following applications:

- Spark 1.6.0 – Spark 1.6.0 was released on January 4th by the Apache Foundation, and we’re excited to include it in Amazon EMR within four weeks of open source GA. This release includes several new features like compile-time type safety using the Dataset API (SPARK-9999), machine learning pipeline persistence using the Spark ML Pipeline API (SPARK-6725), a variety of new machine learning algorithms in Spark ML, and automatic memory management between execution and cache memory in executors (SPARK-10000). View the release notes or learn more about Spark on Amazon EMR.

- Presto 0.130 – Presto is an open-source, distributed SQL query engine designed for low-latency queries on large datasets in Amazon S3 and HDFS. This is a minor version release, with optimizations to SQL operations and support for S3 server-side and client-side encryption in the PrestoS3Filesystem. View the release notes or learn more about Presto on Amazon EMR.

- Hadoop 2.7.1 – This release includes improvements to and bug fixes in YARN, HDFS, and MapReduce. Highlights include enhancements to FileOutputCommitter to increase performance of MapReduce jobs with many output files (MAPREDUCE-4814) and adding support in HDFS for truncate (HDFS-3107) and files with variable-length blocks (HDFS-3689). View the release notes or learn more about Amazon EMR.

- Ganglia 3.7.2 – This release includes new features such as building custom dashboards using Ganglia Views, setting events, and creating new aggregate graphs of metrics. Learn more about Ganglia on Amazon EMR.

Enhancements to the maximizeResourceAllocation Setting for Spark

Currently, Spark on your Amazon EMR cluster uses the Apache defaults for Spark executor settings, which are 2 executors with 1 core and 1GB of RAM each. Amazon EMR provides two easy ways to instruct Spark to utilize more resources across your cluster. First, you can enable dynamic allocation of executors, which allows YARN to programmatically scale the number of executors used by each Spark application, and adjust the number of cores and RAM per executor in your Spark configuration. Second, you can specify maximizeResourceAllocation, which automatically sets the executor size to consume all of the resources YARN allocates on a node and the number of executors to the number of nodes in your cluster (at creation time). These settings create a way for a single Spark application to consume all of the available resources on a cluster. In release 4.3.0, we have enhanced this setting by automatically increasing the Apache defaults for driver program memory based on the number of nodes and node types in your cluster (more information about configuring Spark).
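As a rough sketch of how the two options above might be expressed, EMR 4.x accepts a list of configuration objects when a cluster is created. The Python below only builds that list and prints it as JSON. The helper name `spark_configurations` is made up for this illustration, and the classification and property names used here (`spark`, `spark-defaults`, `maximizeResourceAllocation`, `spark.dynamicAllocation.enabled`) should be checked against the EMR documentation for your release.

```python
import json


def spark_configurations(maximize=True):
    """Build an EMR configurations list for one of the two options described above.

    maximize=True  -> ask EMR to size executors via maximizeResourceAllocation
    maximize=False -> turn on Spark dynamic allocation of executors instead
    (Hypothetical helper; not part of any AWS SDK.)
    """
    if maximize:
        return [{
            "Classification": "spark",
            "Properties": {"maximizeResourceAllocation": "true"},
        }]
    return [{
        "Classification": "spark-defaults",
        "Properties": {"spark.dynamicAllocation.enabled": "true"},
    }]


if __name__ == "__main__":
    # Print the JSON so it can be saved to a file and reused.
    print(json.dumps(spark_configurations(maximize=True), indent=2))
```

The printed JSON can be saved to a file and supplied when the cluster is created, for example through the `--configurations` option of `aws emr create-cluster`.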

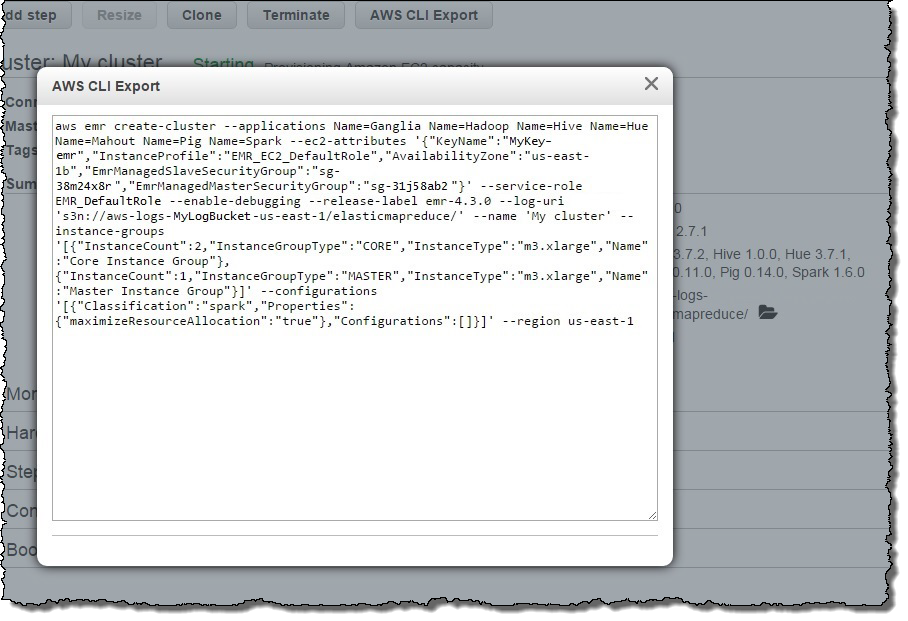

AWS CLI Export in the EMR Console

You can now generate an EMR create-cluster command representative of an existing cluster with a 4.x release using the AWS CLI Export option on the Cluster Details page in the AWS Management Console. This allows you to quickly create a cluster using the Create Cluster experience in the console, and easily generate the AWS CLI script to recreate that cluster from the AWS CLI.

Launch an Amazon EMR Cluster with Release 4.3.0 Today

To create an Amazon EMR cluster with 4.3.0, select release 4.3.0 on the Create Cluster page in the AWS Management Console, or use the release label emr-4.3.0 when creating your cluster from the AWS CLI or using an SDK with the EMR API.
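For the SDK route, here is a minimal sketch using the AWS SDK for Python (boto3). Only the release label `emr-4.3.0` comes from this post. The cluster name, instance types, node count, region, and the two role names are assumptions for illustration, so substitute values that exist in your own account.

```python
def create_cluster_request(name="emr-4-3-0-demo", node_count=3):
    """Return keyword arguments for an EMR RunJobFlow call on release emr-4.3.0.

    Everything except the release label is an illustrative assumption.
    """
    return {
        "Name": name,
        "ReleaseLabel": "emr-4.3.0",
        "Applications": [{"Name": "Spark"}, {"Name": "Ganglia"}],
        "Instances": {
            "MasterInstanceType": "m3.xlarge",   # assumed instance type
            "SlaveInstanceType": "m3.xlarge",    # assumed instance type
            "InstanceCount": node_count,
            "KeepJobFlowAliveWhenNoSteps": True,
        },
        "JobFlowRole": "EMR_EC2_DefaultRole",    # assumed role name
        "ServiceRole": "EMR_DefaultRole",        # assumed role name
    }


def launch(region="us-east-1"):
    """Send the request with boto3 (needs boto3 installed and AWS credentials)."""
    import boto3  # imported lazily so the request builder works without it

    emr = boto3.client("emr", region_name=region)
    response = emr.run_job_flow(**create_cluster_request())
    return response["JobFlowId"]
```

Keeping the request in a plain dictionary makes it easy to review or version before anything is launched; calling `launch()` starts a real cluster and incurs charges.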

— Jon Fritz, Senior Product Manager, Amazon EMR

Tuesday, January 26, 2016

A Waterproof and Dustproof iPad?

Monday, January 25, 2016

AWS Marketplace – Support for the Asia Pacific (Seoul) Region

Early in my career I worked for several companies that developed and shipped (on actual tapes) packaged software. Back in those pre-Internet days, marketing, sales, and distribution were all done on a country-by-country basis. This often involved setting up a field office and hiring local staff, both of which were expensive, time-consuming, and somewhat speculative. Providing prospective customers with time-limited access to trial copies was also difficult for many reasons including hardware and software compatibility, procurement & licensing challenges, and all of the issues that would inevitably arise during installation and configuration.

Today, the situation is a lot different. Marketing, sales, and distribution are all a lot simpler and more efficient, thanks to the Internet. For example, AWS Marketplace has streamlined the procurement process. With ready access to a very wide variety of commercial and open source software products from ISVs, customers can find what they want, buy it, and deploy it to AWS in minutes, with just a few clicks. Because many of the products in AWS Marketplace include a free trial and/or an hourly pricing option, potential large-scale users can take the products for a spin and make sure that they will satisfy their needs.

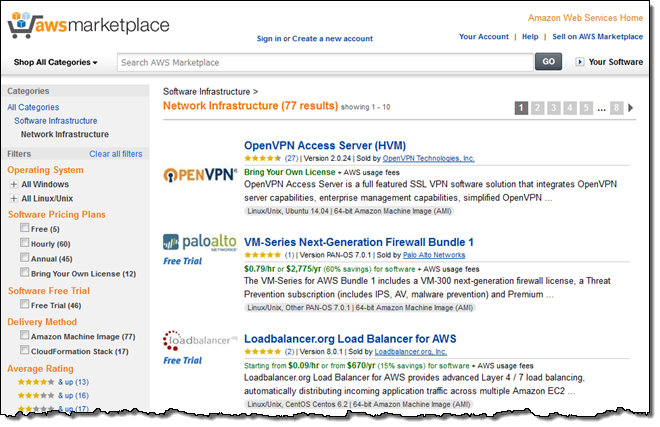

Support for the Asia Pacific (Seoul) Region

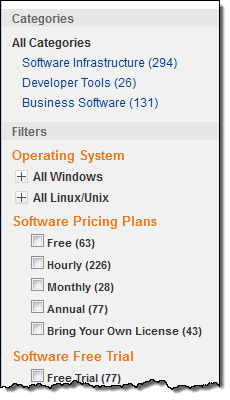

Now that the new Asia Pacific (Seoul) Region is up and running, customers located in Korea, as well as global companies serving Korean end users, can take advantage of the AWS Marketplace. There are now more than 600 products available for 1-click deploy in categories such as Network Infrastructure, Security, Storage, and Business Intelligence.

These products are available under several different pricing plans including free, hourly, monthly, and annual. For companies that already own applicable licenses for the desired products, a BYOL (Bring Your Own License) option is also available.

As I write this, more than 150 products are available for free trials in the Asia Pacific (Seoul) Region!

Several Korean ISVs have already listed their products on AWS Marketplace. Here’s a sampling:

- TMAXSoft – Tibero5 (paid AMI).

- Gruter – Enterprise Tajo (paid AMI).

- PentaSecurity – CloudBric (SaaS).

ISV Opportunities

If you are a software vendor or developer and would like to list your products in AWS Marketplace, please take a look at the Sell on AWS Marketplace information. Customers will be able to launch your products in minutes and pay for them as part of the regular AWS billing system. As a vendor of products that are available in AWS Marketplace, you will be able to discover new customers and benefit from a shorter sales cycle.

Jeff;

You can now speak to your Amazon Fire TV Stick Remote!

Saturday, January 23, 2016

New Nifty Kitchen Utensil

Thursday, January 21, 2016

AWS – Ready to Weather the Storm

As people across the Northeastern United States are stocking up their pantries and preparing their disaster supplies kits, AWS is also preparing for winter snow storms and the subsequent hurricane season. After fielding several customer requests for information about our preparation regime, my colleagues Brian Beach and Ilya Epshteyn wrote the following guest post in order to share some additional information.

— Jeff;

AWS takes extensive precautions to help ensure that we will remain fully operational, with no loss of service for our hosted applications even during a major weather event or natural disaster. How reliable is an application hosted by AWS? In 2014, Nucleus Research surveyed 198 AWS customers that reported moving existing workloads from on-premises to AWS and found that they were able to reduce unplanned downtime by 32% (see Availability and Reliability in the Cloud: Amazon Web Services for more info).

AWS replicates critical system components across multiple Availability Zones to ensure high availability both under normal circumstances and during disasters such as fires, tornadoes, or floods. Our services are available to customers from 12 regions in the United States, Brazil, Europe, Japan, Singapore, Australia, Korea, and China with 32 Availability Zones. Each Availability Zone runs on its own independent infrastructure, engineered to be highly reliable so that even extreme disasters or weather events should only affect a single Availability Zone. The datacenters’ electrical power systems are designed to be fully redundant and maintainable without impact to operations. Common points of failure, such as generators, UPS units, and air conditioning, are not shared across Availability Zones.

At AWS, we plan for failure by maintaining contingency plans and regularly rehearsing our responses. In the words of Werner Vogels, Amazon’s CTO: “Everything fails, all the time.” We regularly perform preventative maintenance on our generators and UPS units to ensure that the equipment is ready when needed. We also maintain a series of incident response plans covering both common and uncommon events and update them regularly to incorporate lessons learned and prepare for emerging threats. In the days leading up to a known event such as a hurricane, we make preparations such as increasing fuel supplies, updating staffing plans, and adding provisions like food and water to ensure the safety of the support teams. Once it is clear that a storm will impact a specific region, the response plan is executed and we post updates to the Service Health Dashboard throughout the event.

During Hurricane Sandy—the most destructive hurricane of the 2012 Atlantic hurricane season, and the second-costliest hurricane in United States history— AWS remained online throughout the entire storm. An extensive Hurricane Sandy Response Plan, including 24/7 staffing by all service teams, escalation plans and continuous status updates, assured normal operations and service quality for our customers.

In fact, AWS’s highly reliable platform also played a key role in enabling a more effective storm response. A&T Systems (ATS.com), an AWS Advanced Consulting partner, used AWS in support of a statewide emergency management agency as Hurricane Sandy struck. Another AWS customer, MapBox, provided maps for several storm-related services to help predict and track Sandy’s progression, communicate evacuation plans, and track surges.

In the aftermath of the storm, some companies established operations in the AWS Cloud to replace datacenters lost to flooding and power outages. One such example is NYU’s Langone Medical Center. As noted in the article Still Recovering from Sandy, “…NYU researchers [were] able to push forward with their sequencing experiments. They were able to salvage 200 terabytes of backup sequencing data, and have set up temporary data storage in a New Jersey facility, using computing power from the NYU Center for Genomics and Systems Biology and the Amazon cloud.”

What’s even more interesting is that AWS provided a unique capability for our customers to prepare for worst case scenarios by copying and replicating their data to other AWS regions proactively. Although ultimately this was not necessary, since US East (Northern Virginia) stayed up without any issues, our customers had peace of mind that they would be able to continue their business as usual even if it did fail. One example is the Obama 2012 Campaign: in a nine-hour period, they proactively replicated their entire environment from the US East (Northern Virginia) to the US West (Northern California) region, providing cross-continent fault tolerance on demand. The Obama campaign was able to copy over 27 terabytes of data from East to West in less than four hours (watch the re:Invent video, Continuous Integration and Deployment Best Practices on AWS, to learn more). Leo Zhadanovsky, a DevOps engineer for the Obama Campaign & Democratic National Committee, who now works for AWS, commented that “AWS’s scalable, on-demand capacity allowed Obama for America to quickly spin up a disaster-recovery copy of their infrastructure in another region in a matter of hours — something that would normally take weeks, or months in on premise environment.”

While AWS goes to great lengths to provide availability of the cloud, our customers share responsibility for ensuring availability within the cloud. These customers and others like them have succeeded because they designed for failure and have adopted best practices for high availability, such as taking advantage of multiple Availability Zones and configuring Auto Scaling groups to replace unhealthy instances. The Building Fault-Tolerant Applications on AWS whitepaper is a great introduction to achieving high availability in the cloud. In addition, the AWS Well-Architected Framework codifies the experiences of thousands of customers, helping customers assess and improve their cloud-based architectures and mitigate disruptions.
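To make the two best practices above a little more concrete, here is a small sketch of an Auto Scaling group request that spans more than one Availability Zone and uses EC2 health checks so that unhealthy instances are replaced. The group name, launch configuration name, sizes, zone names, and grace period are all placeholders invented for this example; the request keys follow the Auto Scaling `CreateAutoScalingGroup` API as exposed by boto3.

```python
def auto_scaling_group_request(zones, min_size=2, max_size=6):
    """Return CreateAutoScalingGroup arguments spread across several zones.

    Names and sizes are illustrative placeholders, not recommendations.
    """
    zones = list(zones)
    if len(zones) < 2:
        # A single zone would defeat the purpose of designing for failure.
        raise ValueError("use at least two Availability Zones")
    return {
        "AutoScalingGroupName": "web-asg",               # hypothetical name
        "LaunchConfigurationName": "web-launch-config",  # hypothetical name
        "MinSize": min_size,
        "MaxSize": max_size,
        "AvailabilityZones": zones,
        "HealthCheckType": "EC2",        # replace instances that fail EC2 checks
        "HealthCheckGracePeriod": 300,   # seconds to wait before checking
    }


def create_group(zones, region="us-east-1"):
    """Send the request with boto3 (needs boto3 installed and AWS credentials)."""
    import boto3  # imported lazily so the request builder works without it

    client = boto3.client("autoscaling", region_name=region)
    client.create_auto_scaling_group(**auto_scaling_group_request(zones))
```

For example, `auto_scaling_group_request(["us-east-1a", "us-east-1b"])` describes a group that can keep serving traffic if one of the two zones is affected.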

As winter storms threaten the East Coast, AWS customers can rest assured that our Services and Availability Zones provide the most solid foundation upon which to build a reliable application. Together, we can build a highly available and resilient application in the cloud, ready to weather the storm.

— Brian Beach (Cloud Architect) and Ilya Epshteyn (Solutions Architect)

Amazon Offering Full Refunds on all Hoverboards

Wednesday, January 20, 2016

Update your Apple Watch for only $33.95!

120 Uses for Your Empty Data Center

It is big. It is cold. It is secure. And now it is empty, because you have gone all-in to the AWS Cloud. So, what do you do with your data center? Once the pride and joy of your IT staff, it is now a stark, expensive reminder that the world has changed.

Many AWS customers are migrating from their existing on-premises data centers to AWS. Here are just a few of their stories (links go to case studies, blog posts, and videos from re:Invent):

- Delaware North – This peanut and popcorn vendor reduced their server footprint by more than 90% and expects to save over $3.5 million in IT acquisition and maintenance costs over five years by using AWS.

- Seaco – This global sea-container leasing company implemented the SAP Business Suite on AWS and reduced latency by more than 90%.

- Kaplan – This education and test prep company moved a set of development, test, staging, and production environments that once spanned 12 separate data centers to AWS, eliminating 8 of the 12 in the process.

- Talen Energy – As part of a divestiture, this nuclear power company decided to move to AWS and found that they were able to focus more of their energy on their core business.

- Condé Nast – This well-known publisher migrated over 500 servers and 1 petabyte of storage to AWS and went all-in.

- Hearst Corporation – This diversified communications company migrated 10 of their 29 data centers to AWS.

- University of Notre Dame – This university has already migrated its web site to AWS and plans to move 80% of the remaining workloads in the next three years.

- Capital One – This finance company has made AWS a central part of its technology strategy.

- General Electric – This diversified company is migrating more than 9,000 workloads to AWS, while closing 30 out of 34 data centers.

If you are curious, here’s what happens when a data center closes down:

Now What?

You may be wondering what you are supposed to do with all of that cold, empty space after your migration!

With generous contributions from my colleagues (they did 85% of the work), I have compiled a list of 120 possible uses for your data center. For your reading pleasure, I have arranged them by category. As you can see, my colleagues are incredibly imaginative! There is some overlap here, but I didn’t want to play favorites. So, here you go…

Sports and Recreation

- Ice hockey rink.

- Whirlyball arena.

- Go-kart track.

- Snowshoe practice area.

- Laser tag.

- Paintball arena.

- Sweat lodge.

- Hot yoga studio.

- Immersive VR gaming arena.

- Largest paper football game. Ever.

- Portal arena.

- Paint walls black. Turn off lights. Dress in black. Water balloon fight with fluorescent paint.

- Extreme weather survival training.

- Giant domino rally.

- Venue for world’s longest paper airplane flight.

- twitch.tv live video game championship arenas.

- Bubble football arena.

- World’s largest ball pit.

- Ultimate LAN party room.

- Indoor lazy river.

- Indoor ski resort.

- Shooting range.

- All weather theme park – “Datacenter Land.”

- Segway racing track.

- Indoor surf park.

- Massive spinning studio.

- Create the World Trampoline Wallyball League (WTWL).

- Ultimate Boda Borg quest challenge.

- Indoor hang gliding wind tunnel.

- 48 state of the art gyms.

- Indoor dog park.

- Zamboni driver training facility.

Food and Beverages

- A large, cold area is perfect for growing and preserving food.

- Meat locker / meat packing facility.

- Popsicle factory.

- Wine cellar.

- Penguin sanctuary.

- Mushroom farm.

- Cheese grotto.

- Practice area for growing potatoes on Mars.

- World’s largest Easy Bake Oven.

Eminently Practical

- Classroom.

- Electric car charging station.

- Storm shelter.

- Drone zone.

- Giant pencil case.

- Bomb shelter.

- Car wash.

- Community theater.

- Art gallery.

- Secure storage for all the money saved by using AWS.

- Cloud University.

- Solar power generation plant.

- 48 really large Airbnb opportunities.

- Maker’s den.

Space, the Final Frontier

- Blimp Hangar with “Moffett Field, You Got Nothing on Me!” painted on the side.

- UFO storage.

- Fill with water and use as a NASA zero-gravity training facility.

- Time portal for the Restaurant at the end of the Internet.

- Blue Origin space terminal with interplanetary duty free zone.

- Use racks as studio apartments in San Francisco. Rent at $5000/month.

Dead or Alive

- Homeless shelter.

- Orphanage / charity home.

- Morgue.

- Cryogenic human storage.

- Zombie apocalypse refuge.

- Cold therapy spa.

- Rehabilitation center.

- Snowman preservation facility.

Just Plain Weird

- Unicorn farm.

- Mattress testing facility.

- Biohazard isolation area.

- Stress-relief shattering emporium.

- Grow operation (where permitted by law).

- Military parade ground.

- Venue for all 2016 US presidential debates.

- Super ball testing facility.

- Corporate meditation center.

- Duck echo testing facility.

- Automated paper making factory for paper towels and toilet paper.

- Tour facility for world’s largest ball of Ethernet or fiber optic cable.

- Robot cat toy factory for Ethernet / fiber cable yarn balls.

- Storage for recently unearthed E.T. The Extra-Terrestrial game cartridges.

- Giant sensory deprivation tank.

- Biosphere 3.

- “Can you hear me now?” test facility.

TV and Movies

- Derek Zoolander Center for Kids Who Can’t Read Good.

- Battle of Hoth reenactment.

- Trash compactor where we can put people who reveal Star Wars spoilers.

- American Gladiator or American Ninja Warrior arena.

- Hangar for Death Star.

- Fill it with water. Re-enact Finding Nemo.

- Take old printers and re-enact scene from Office Space. Call it a Silent Meditation Retreat.

- Erect transparent aluminum walls, fill with water, store whales for when aliens come to contact them.

- Raiders of the Lost Ark warehouse.

- Training center for the Knights of Ren.

- Mythbusters science lab.

- Top Gear secret race track.

- Mad Max: Server Room Rampage.

- High security storage facility for broken down Daleks.

- Tardis repair center for all things wibbly wobbly or timey wimey.

Uniquely Amazonian

- Venue for next re:Play party.

- Amazon fulfillment center.

- Alternate venue for re:Invent 2017.

- AWS Import/Export Snowball processing facility.

- Amazon Fresh greenhouse.

- Brewery for the Amazon Simple Beer Service.

- Amazon Locker site.

- Actual cloud storage (leave the A/C on and pipe in some steam).

It’s Dead, Jim

- Electronics shredding center.

- Warehouse used to refurbish decommissioned corporate computers/servers to deploy to underprivileged schools worldwide for education.

- Venue to host auction for empty data centers.

- Server to host the auction website for empty data centers.

- Museum of data center history.

- “Co-location Data Center” – National Trust for Historic Preservation.

- Outreach centers for all those IT Admins that claimed they would never go all-in in the cloud.

- Retro-style storage for paper files.

- Data center resort with gardens grown on servers.

- Buggy whip factory.

- Museum of technology history.

PS – Please feel free to leave suggestions for additional uses in the comments.

AWS Webinars for January, 2016

Did you resolve to learn something new in 2016? If so, you should attend an AWS webinar!

Each month, we run a series of webinars that are designed to bring you up to speed on the latest AWS services & features, and to make sure that you are aware of the best ways to put them to use. The webinars are conducted by senior AWS Product Managers and Solutions Architects and often include a guest speaker from our customer base.

The webinars are free but “seating” is limited and you should definitely sign up ahead of time if you want to attend (all times are Pacific). Here’s what we have in store this month:

Tuesday, January 26

There are several different ways to deploy your applications on AWS including AWS Elastic Beanstalk, AWS CodeDeploy, and Amazon EC2 Container Service. This webinar will help you to understand the strengths of each service and provide you with a framework to help you to decide which one to use.

- Webinar: Introduction to Deploying Applications on AWS (9 – 10 AM).

AWS IoT is a managed cloud platform that lets connected devices interact with cloud applications and other devices. This webinar will show you how constrained devices can send data to the cloud and receive commands back to the device.

- Webinar: Getting Started with AWS IoT (10:30 – 11:30 AM).

- Blog Post: AWS IoT – Cloud Services for Connected Devices.

AWS provides many options and tools that are a great fit for your big data needs. This webinar will provide you with an overview of the options including Hadoop, Spark, and NoSQL databases.

- Webinar: Getting Started with Big Data on AWS (Noon – 1 PM).

- Blog Post: Amazon EMR Update – Apache Spark 1.5.2, Ganglia, Presto, Zeppelin, and Oozie.

Wednesday, January 27

Amazon Aurora is a fast and cost-effective relational database designed to be compatible with MySQL.

- Webinar: Amazon Aurora for Enterprise Database Applications (9 – 10 AM).

- Blog Post: Amazon Aurora – New Cost-Effective MySQL-Compatible Database Engine for Amazon RDS.

Do you have existing data that you want to bring to the cloud? Our data migration webinar will review the options and help you to choose the one that fits your use case:

- Webinar: Cloud Data Migration: 6 Strategies for Getting Data into AWS (10:30 – 11:30 AM).

- Blog Post: AWS Import/Export Snowball – Transfer 1 Petabyte Per Week Using Amazon-Owned Storage Appliances.

Many developers are using containers to simplify the packaging, deployment, and operation of their applications. This webinar will show you how to use Amazon EC2 Container Service to simplify the use of Docker in production.

- Webinar: Introduction to Docker on AWS (Noon – 1:00 PM).

- Blog Post: Amazon EC2 Container Service (ECS) – Container Management for the AWS Cloud.

Thursday, January 28

Machine Learning helps you to extract value from data. This webinar will show you how to build machine learning models and use them to make predictions.

- Webinar: Building Smart Applications with Amazon Machine Learning (9 – 10 AM).

- Blog Post: Amazon Machine Learning – Make Data-Driven Decisions at Scale.

I am always happy to see ways that two or more AWS services can be combined to create something cool. This webinar will show you how to use AWS Lambda and the new AWS IoT service to do just that:

- Webinar: Build IoT Backends with AWS IoT & AWS Lambda (10:30 – 11:30 AM).

- Blog Posts: AWS Lambda – Run Code in the Cloud and AWS IoT – Cloud Services for Connected Devices.

The phrase “infrastructure as code” has become increasingly popular over the last couple of years. This webinar will show you how to implement this new practice using AWS:

- Webinar: Managing your Infrastructure as Code (Noon – 1 PM).

- Blog Post: New AWS Tools for Code Management and Deployment.

— Jeff;

New – GxP Compliance Resource for AWS

Ever since we launched AWS, customers have been curious about how they can use it to build and run applications that must meet many different types of regulatory requirements. For example, potential AWS users in the pharmaceutical, biotech, and medical device industries are subject to a set of guidelines and practices that are commonly known as GxP. In those industries, the x can represent Laboratory (GLP), Clinical (GCP), or Manufacturing (GMP).

These practices are intended to ensure that a product is safe and that it works as intended. Many of the practices are focused on traceability (the ability to reconstruct the development history of a drug or medical device) and accountability (the ability to learn who has contributed what to the development, and when they did it). For IT pros in regulated industries, GxP is important because it has requirements on how electronic records are stored, as well as how the systems that store these records are tested and maintained.

Because the practices became prominent at a time when static, on-premises infrastructure was the norm, companies have developed practices that made sense in this environment but not in the cloud. For example, many organizations perform point-in-time testing of their on-premises infrastructure and are not taking advantage of all that the cloud has to offer. With the cloud, practices such as dynamic verification of configuration changes, compliance-as-code, and the use of template-driven infrastructure are easy to implement and can have important compliance benefits.

New Resource

Customers are already running GxP-workloads on AWS! In order to help speed the adoption for other pharma and medical device manufacturers, we are publishing our new GxP compliance resource today.

The GxP position paper (Considerations for Using AWS Products in GxP Systems) provides interested parties with a brief overview of AWS and of the principal services, and then focuses on a discussion of how they can be used in a GxP system. The recommendations within the paper fit into three categories:

- Quality Systems – This section addresses management, personnel, audits, purchasing controls, product assessment, supplier evaluation, supplier agreement, and records & logs.

- System Development Life Cycle – This section addresses system development, validation, and operation. As I read this section of the document, it was interesting to learn how the software-defined infrastructure-as-code AWS model allows for better version control and is a great fit for GxP. The ability to use a common set of templates for development, test, and production environments that are all configured in the same way simplifies and streamlines several aspects of GxP compliance.

- Regulatory Affairs – This section addresses regulatory submissions, inspections by health authorities, and personal data privacy controls.
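The idea of a common template for development, test, and production can be sketched in a few lines. The example below is my own illustration and is not taken from the position paper: it defines one small AWS CloudFormation template (a versioned S3 bucket) and builds a `CreateStack` request per environment, so the only thing that varies is a parameter value. The stack name prefix, the `RecordsBucket` resource, and the `stack_request` helper are hypothetical.

```python
import json

# One template shared by every environment (illustrative content only).
TEMPLATE = {
    "AWSTemplateFormatVersion": "2010-09-09",
    "Description": "Illustrative records bucket shared by all environments",
    "Parameters": {
        "Environment": {
            "Type": "String",
            "AllowedValues": ["dev", "test", "prod"],
        }
    },
    "Resources": {
        "RecordsBucket": {
            "Type": "AWS::S3::Bucket",
            "Properties": {
                "VersioningConfiguration": {"Status": "Enabled"},
                "Tags": [{"Key": "Environment", "Value": {"Ref": "Environment"}}],
            },
        }
    },
}


def stack_request(environment):
    """Return CreateStack arguments; only the parameter value differs per environment."""
    return {
        "StackName": "gxp-records-" + environment,  # hypothetical naming scheme
        "TemplateBody": json.dumps(TEMPLATE, sort_keys=True),
        "Parameters": [
            {"ParameterKey": "Environment", "ParameterValue": environment}
        ],
    }


if __name__ == "__main__":
    bodies = {stack_request(env)["TemplateBody"] for env in ("dev", "test", "prod")}
    print(len(bodies))  # one distinct template body across all three environments
```

Because the template body is identical for every environment, it can be kept under version control and reviewed once, which is the property the paper highlights.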

We hired Lachman Consultants (an internationally renowned compliance consulting firm), and had them contribute to and review an earlier draft of the position paper. The version that we are publishing today reflects their feedback.

Join our Webinar

If you are interested in building cloud-based systems that must adhere to GxP, please join our upcoming GxP Webinar. Scheduled for February 23, this webinar will give you an overview of the new GxP compliance resource and will show you how AWS can facilitate GxP compliance within your organization. You’ll learn about rules-based consistency, compliance-as-code, repeatable software-based testing, and much more.

PS – The AWS Life Sciences page is another great resource!