Wednesday, August 31, 2016

Celebrate Baby Safety Month with These Top 10 Essentials

New – Run SAP HANA on Clusters of X1 Instances

My colleague Steven Jones wrote the guest post below in order to tell you about an impressive new way to use SAP HANA for large-scale workloads.

-Jeff;

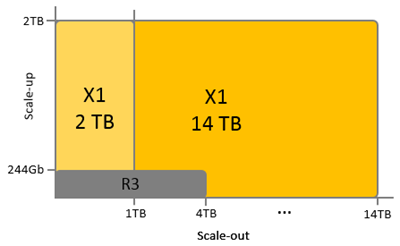

Back in May we announced the availability of our new X1 instance type x1.32xlarge, our latest addition to the Amazon EC2 memory-optimized instance family with 2 TB of RAM, purpose built for running large-scale, in-memory applications and in-memory databases like SAP HANA in the AWS cloud.

At the same time, we announced SAP certification for single-node deployments of SAP HANA on X1. Since then, many AWS customers across the globe have been using X1 for a broad range of HANA use cases, including OLTP workloads such as S/4HANA and Suite on HANA, and OLAP workloads such as Business Warehouse on HANA and other BI strategies. Even so, many customers have been asking for the ability to use SAP HANA with X1 instances clustered together in scale-out fashion.

After extensive testing and benchmarking of scale-out HANA clusters in accordance with SAP's certification processes, we're pleased to announce that our AWS X1 instances are now certified by SAP for large scale-out OLAP deployments, including BW/4HANA, for up to 7 nodes or 14 TB of RAM. The certification arrives today in conjunction with the announcement of BW/4HANA, SAP's highly optimized next-generation business warehouse. We are excited to be able to support the launch of SAP's new flagship Business Warehouse offering, BW/4HANA, with new flexible, scalable, and cost-effective deployment options.

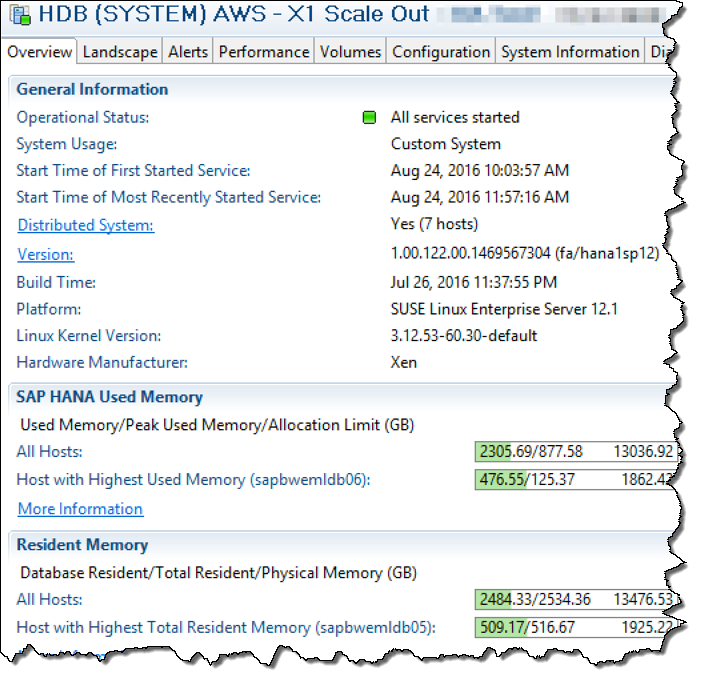

Here's a screenshot from HANA Studio showing a large (14 TB) scale-out cluster running on seven X1 instances:

And this is just the beginning; as indicated, we have plans to make X1 instances available in other sizes and we are testing even larger clusters in the range of 50 TB in our lab. If you need scale-out clusters larger than 14 TB, please contact us; we'd like to work with you.

Reduced Cost and Complexity

Many AWS customers have also been running SAP HANA in scale-out fashion across multiple R3 instances. This new certification brings the ability to consolidate larger scale-out deployments onto fewer larger instances, reducing both cost and complexity. See our SAP HANA Migration guide for details on consolidation strategies.

Flexible High-Availability Options

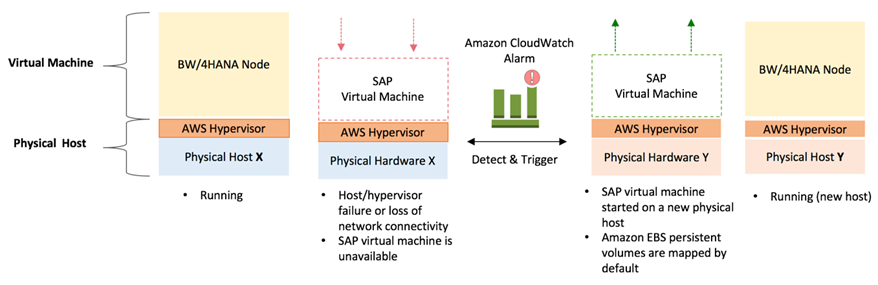

The AWS platform brings a wide variety of options depending on your needs for ensuring critical SAP HANA deployments like S/4HANA and BW/4HANA are highly available. In fact, customers who have run scale-out deployments of SAP HANA on premises, or with traditional hosting providers, tell us they often have to pay expensive maintenance contracts in addition to purchasing standby nodes or spare hardware to be able to rapidly respond to hardware failures. Others unfortunately forgo this extra hardware and hope nothing happens.

One particularly useful option that customers are leveraging on the AWS platform is Amazon EC2 Auto Recovery. Customers simply create an Amazon CloudWatch alarm that monitors an EC2 instance and automatically recovers it to a healthy host if it becomes impaired due to an underlying hardware failure or a problem that requires AWS involvement to repair. A recovered instance is identical to the original instance, including the instance ID, attached EBS storage volumes, and other configuration such as hostname and IP address. Standard pricing for Amazon CloudWatch applies (for example, $0.10 per alarm per month in the US East Region). Essentially, this allows you to leverage our spare capacity for rapid recovery while we take care of the unhealthy hardware.
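As a rough illustration of the Auto Recovery setup described above, here is a minimal Python sketch that builds the parameters for a CloudWatch alarm on the `StatusCheckFailed_System` metric with the EC2 recover action. The alarm name, the period, and the threshold values are illustrative assumptions rather than recommendations from this post, and the `create_recovery_alarm` helper assumes that the boto3 SDK and AWS credentials are available.

```python
def build_recovery_alarm(instance_id: str, region: str = "us-east-1") -> dict:
    """Return keyword arguments for CloudWatch put_metric_alarm.

    The alarm fires when the system status check fails and triggers
    the built-in EC2 recover action for the instance.
    """
    return {
        # Hypothetical naming scheme, chosen only for this example.
        "AlarmName": f"auto-recover-{instance_id}",
        "Namespace": "AWS/EC2",
        "MetricName": "StatusCheckFailed_System",
        "Dimensions": [{"Name": "InstanceId", "Value": instance_id}],
        "Statistic": "Maximum",
        # Assumed values: two consecutive failed one-minute checks.
        "Period": 60,
        "EvaluationPeriods": 2,
        "Threshold": 1,
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
        "AlarmActions": [f"arn:aws:automate:{region}:ec2:recover"],
    }


def create_recovery_alarm(instance_id: str, region: str = "us-east-1") -> None:
    """Create the alarm in the given region (requires boto3 and credentials)."""
    import boto3  # imported lazily so the builder above works without the SDK

    cloudwatch = boto3.client("cloudwatch", region_name=region)
    cloudwatch.put_metric_alarm(**build_recovery_alarm(instance_id, region))
```

Keeping the parameter builder separate from the API call makes it easy to review or version the alarm definition before applying it to each node of a scale-out cluster.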

Getting Started

You can deploy your own production ready single-node HANA or scale-out HANA solution on X1 using the updated AWS Quick Start Reference Deployment for SAP HANA in less than an hour using well-tested configurations.

Be sure to also review our SAP HANA Implementation and Operations Guide for other guidance and best practices when planning your SAP HANA implementation on Amazon Web Services.

Are you in the Bay Area on September 7 and want to join us for an exciting AWS and SAP announcement? Register here and we'll see you in San Francisco!

Can't make it? Join our livestream on September 7 at 9 AM PT and learn how AWS and SAP are working together to provide value for SAP customers.

We look forward to serving you.

- Steven Jones, Senior Manager, AWS Solutions Architecture

Tuesday, August 30, 2016

Sonos is Teaming Up with Amazon to Bring Voice Control Feature to Its Speakers

AWS Hot Startups – August 2016

Back with her second guest post, Tina Barr talks about four more hot startups!

-Jeff;

This month we are featuring four hot AWS-powered startups:

- Craftsvilla – Offering a platform to purchase ethnic goods.

- SendBird – Helping developers build 1-on-1 messaging and group chat quickly.

- Teletext.io – A solution for content management, without the system.

- Wavefront – A cloud-based analytics platform.

Craftsvilla

Craftsvilla was born in 2011 out of sheer love and appreciation for the crafts, arts, and culture of India. On a road trip through the Gujarat region of western India, Monica and Manoj Gupta were mesmerized by the beautiful creations crafted by local artisans. However, they were equally dismayed that these artisans were struggling to make ends meet. Monica and Manoj set out to create a platform where these highly skilled workers could connect directly with their consumers and reach a much broader audience. The demand for authentic ethnic products is huge across the globe, but consumers are often unable to find the right place to buy them. Craftsvilla helps to solve this issue.

The culture of India is so rich and diverse that no one had attempted to capture it on a single platform. Using technological innovations, Craftsvilla combines apparel, accessories, health and beauty products, food items, and home décor all in one easily accessible space. For instance, they not only offer a variety of clothing (Salwar suits, sarees, lehengas, and casual wear), but each of those categories is further broken down into subcategories. Consumers can find anything that fits their needs – they can filter products by fabric, style, occasion, and even by the type of work (embroidered, beads, crystal work, handcrafted, etc.). If you are interested in trying new cuisine, Craftsvilla can help. They offer hundreds of interesting products, from masalas to traditional sweets to delicious tea blends. They even give you the option to filter through India's many diverse regions to discover new foods.

Becoming a seller on Craftsvilla is simple. Shop owners just need to create a free account and they're able to start selling their unique products and services. Craftsvilla's ultimate vision is to become the 'one-stop destination' for all things ethnic. They look to be well on their way!

AWS itself is an engineer on Craftsvilla's team. Customer experience is highly important to the people behind the company, and an integral aspect of their business is to attain scalability with efficiency. They automate their infrastructure at a large scale, which wouldn't be possible at the current pace without AWS. Currently, they utilize over 20 AWS services – Amazon Elastic Compute Cloud (EC2), Elastic Load Balancing, Amazon Kinesis, AWS Lambda, Amazon Relational Database Service (RDS), Amazon Redshift, and Amazon Virtual Private Cloud to name a few. Their app QA process will move to AWS Device Farm, completely automated in the cloud, on 250+ services thanks to Lambda. Craftsvilla relies completely on AWS for all of their infrastructure needs, from web serving to analytics.

Check out Craftsvilla's blog for more information!

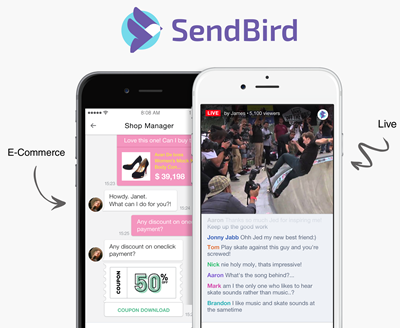

SendBird

After successfully exiting their first startup, SendBird founders John S. Kim, Brandon Jeon, Harry Kim, and Forest Lee saw a great market opportunity for a consumer app developer. Today, over 2,000 global companies such as eBay, Nexon, Beat, Malang Studio, and SK Telecom are using SendBird to implement chat and messaging capabilities in their mobile apps and websites. A few ways companies are using SendBird:

- 1-on-1 messaging for private messaging and conversational commerce.

- Group chat for friends and interest groups.

- Massive scale chat rooms for live-video streams and game communities.

As they watched messaging become a global phenomenon, the SendBird founders realized that it no longer made sense for app developers to build their entire tech stack from scratch. Research from the Localytics Data Team actually shows that in-app messaging can increase app launches by 27% and engagement by 3 times. By simply downloading the SendBird SDK (available for iOS, Android, Unity, .NET Xamarin, and JavaScript), app and web developers can implement real-time messaging features in just minutes. SendBird also provides a full chat history and allows users to send chat messages in addition to complete file and data transfers. Moreover, developers can integrate innovative features such as smart-throttling to control the speed of messages being displayed to the mobile devices during live broadcasting.

After graduating from the Y Combinator W16 batch, the company grew from 1,000,000 monthly chat users to 5,000,000 monthly chat users within months while handling millions of new messages daily across live-video streaming, games, ecommerce, and consumer apps. Customers found value in having a cross-platform, full-featured, and whole-stack approach to a real-time chat API and SDK that can be deployed in a short period of time.

SendBird chose AWS to build a robust and scalable infrastructure to handle a massive concurrent user base scattered across the globe. It uses EC2 with Elastic Load Balancing and Auto Scaling, Route 53, S3, ElastiCache, Amazon Aurora, CloudFront, CloudWatch, and SNS. The company expects to continue partnering with AWS to scale efficiently and reliably.

Check out SendBird and their blog to follow their journey!

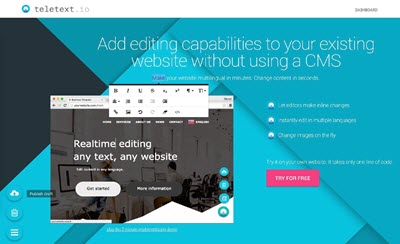

Teletext.io

Marcel Panse and Sander Nagtegaal, co-founders of Teletext.io, had worked together at several startups and experienced the same problem at each one: within the scope of custom software development, content management is a big pain. Even the smallest correction, such as a typo, typically requires a developer, which can become very expensive over time. Unable to find a proper solution that was readily available, Marcel and Sander decided to create their own service to finally solve the issue. Leveraging only the API Gateway, Lambda functions, Amazon DynamoDB, S3, and CloudFront, they built a drop-in content management service (CMS). Their serverless approach for a CMS alternative quickly attracted other companies, and despite intending to use it only for their own needs, the pair decided to professionally market their idea and Teletext.io was born.

Today, Teletext.io is called a solution for content management, without the system. Content distributors are able to edit text and images through a WYSIWYG editor without the help of a programmer and directly from their own website or user interface. There are just three easy steps to get started:

- Include Teletext.io script.

- Add data attributes.

- Log in and start typing.

That's it! There is no system that needs to be installed or maintained by developers – Teletext.io works directly out of the box. In addition to recurring content updates, the data attribution technique can also be used for localization purposes. Making a website multilingual through a CMS can take days or weeks, but Teletext.io can accomplish this task in mere minutes. The time-saving factor is the main benefit for developers and editors alike.

Teletext.io uses AWS in a variety of ways. Since the company is responsible for the website content of others, they must have an extremely fast and reliable system that keeps website visitors from noticing external content being loaded. In addition, this critical infrastructure service should never go down. Both of these requirements call for a robust architecture with as few moving parts as possible. For these reasons, Teletext.io runs a serverless architecture that really makes it stand out. For loading draft content, storing edits and images, and publishing the result, the Amazon API Gateway gets called, triggering AWS Lambda functions. The Lambda functions store their data in Amazon DynamoDB.
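To make the request flow above more concrete, here is a minimal Python sketch of a Lambda-style function that receives an API Gateway proxy request and stores one content edit in a DynamoDB table. It is not Teletext.io's actual code: the function name, the request fields (`key`, `text`), and the item attributes are hypothetical, and the `table` argument is assumed to be a boto3 DynamoDB `Table` resource (or any object with a compatible `put_item` method).

```python
import json
import time


def store_edit(event: dict, table) -> dict:
    """Save one content edit carried in an API Gateway proxy event.

    `table` is expected to expose put_item(Item=...), as a boto3
    DynamoDB Table resource does.
    """
    # API Gateway proxy integration delivers the request body as a string.
    body = json.loads(event.get("body") or "{}")
    key = body.get("key")    # hypothetical field: which text block to update
    text = body.get("text")  # hypothetical field: the edited content

    if not key or text is None:
        return {
            "statusCode": 400,
            "body": json.dumps({"error": "key and text are required"}),
        }

    # Attribute names below are illustrative, not a documented schema.
    table.put_item(
        Item={"content_key": key, "text": text, "updated_at": int(time.time())}
    )
    return {"statusCode": 200, "body": json.dumps({"saved": key})}
```

Inside a real Lambda function the table could be created once, outside the handler, with `boto3.resource("dynamodb").Table(...)` and passed to `store_edit` on each invocation. Passing it in as an argument also keeps the logic easy to exercise locally with a stand-in object.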

Read more about Teletext.io's unique serverless approach in their blog post.

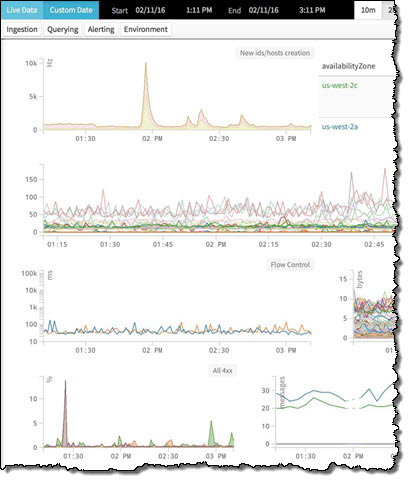

Wavefront

Founded in 2013 and based in Palo Alto, Wavefront is a cloud-based analytics platform that stores time series data at millions of points per second. They are able to detect any divergence from “normal” in hybrid and cloud infrastructures before anomalies ever happen. This is a critical service that companies like Lyft, Okta, Yammer, and Box are using to keep running smoothly. From data scientists to product managers, from startups to Fortune 500 companies, Wavefront offers a powerful query engine and a language designed for everyone.

With a pay-as-you-go model, Wavefront gives customers the flexibility to start with the necessary application size and scale up/down as needed. They also include enterprise-class support as part of their pricing at no extra cost. Take a look at their product demos to learn more about how Wavefront is helping their customers.

The Wavefront Application is hosted entirely on AWS, and runs its single-tenant instances and multi-tenant instances in the virtual private cloud (VPC) clusters within AWS. The application has deep, native integrations with CloudWatch and CloudTrail, which benefits many of its larger customers also using AWS. Wavefront uses AWS to create a “software problem”, to operate, automate and monitor clouds using its own application. Most importantly, AWS allows Wavefront to focus on its core business – to build the best enterprise cloud monitoring system in the world.

To learn more about Wavefront, check out their blog post, How Does Wavefront Work!

Monday, August 29, 2016

Amazon Launches a Hub Dedicated for All your Car Needs

AWS Week in Review – August 22, 2016

Here's the first community-driven edition of the AWS Week in Review. In response to last week's blog post (AWS Week in Review – Coming Back With Your Help!), 9 other contributors helped to make this post a reality. That's a great start; let's see if we can go for 20 this week.

New & Notable Open Source

- cloudhopper is a framework for deploying your APIs with AWS Lambda.

- metalsmith-cloudfront is a Metalsmith plugin for invalidating Amazon CloudFront distributions.

- aws-lambda-container-monitoring monitors the number of active AWS Lambda containers and reports the results to Amazon CloudWatch.

New SlideShare Presentations

- Amazon CloudFront Office Hours – Using Amazon CloudFront with S3 & ELB

- Deep Dive on Amazon S3

- Data Storage for the Long Haul: Compliance and Archive

- Deep Dive on Amazon Aurora

- Deep Dive on Amazon DynamoDB

- Amazon Aurora for Enterprise Database Applications

Upcoming Events

- August 29 (Oslo, Norway) – Practical encryption of content data using AWS Key Management Service (AWS User Group Norway)

- August 31 (Seattle, WA) – Building Smart Healthcare Applications on AWS

- September 6 (Dublin, Ireland) – Developing with Amazon Alexa – Building Voice Experiences for Services & Devices.

Help Wanted

Stay tuned for next week, and please consider helping to make this a community-driven effort!

- Jeff;

Amazon Launches the “Featured On Product Hunt” Collection

Thursday, August 25, 2016

Amazon Baby Registry

Wednesday, August 24, 2016

Amazon Debuts its Kindle Reading Fund Program

Tuesday, August 23, 2016

Build the Wedding Registry of Your Dreams

AWS Week in Review – Coming Back With Your Help!

Back in 2012 I realized that something interesting happened in AWS-land just about every day. In contrast to the periodic bursts of activity that were the norm back in the days of shrink-wrapped software, the cloud became a place where steady, continuous development took place.

In order to share all of this activity with my readers and to better illustrate the pace of innovation, I published the first AWS Week in Review in the spring of 2012. The original post took all of about 5 minutes to assemble, post and format. I got some great feedback on it and I continued to produce a steady stream of new posts every week for over 4 years. Over the years I added more and more content generated within AWS and from the ever-growing community of fans, developers, and partners.

Unfortunately, finding, saving, and filtering links, and then generating these posts grew to take a substantial amount of time. I reluctantly stopped writing new posts early this year after spending about 4 hours on the post for the week of April 25th.

After receiving dozens of emails and tweets asking about the posts, I gave some thought to a new model that would be open and more scalable.

Going Open

The AWS Week in Review is now a GitHub project (https://github.com/aws/aws-week-in-review). I am inviting contributors (AWS fans, users, bloggers, and partners) to contribute.

Every Monday morning I will review and accept pull requests for the previous week, aiming to publish the Week in Review by 10 AM PT. In order to keep the posts focused and highly valuable, I will approve pull requests only if they meet our guidelines for style and content.

At that time I will also create a file for the week to come, so that you can populate it as you discover new and relevant content.

Content & Style Guidelines

Here are the guidelines for making contributions:

- Relevance – All contributions must be directly related to AWS.

- Ownership – All contributions remain the property of the contributor.

- Validity – All links must be to publicly available content (links to free, gated content are fine).

- Timeliness – All contributions must refer to content that was created on the associated date.

- Neutrality – This is not the place for editorializing. Just the facts / links.

I generally stay away from generic news about the cloud business, and I post benchmarks only with the approval of my colleagues.

And now a word or two about style:

- Content from this blog is generally prefixed with “I wrote about POST_TITLE” or “We announced that TOPIC.”

- Content from other AWS blogs is styled as “The BLOG_NAME wrote about POST_TITLE.”

- Content from individuals is styled as “PERSON wrote about POST_TITLE.”

- Content from partners and ISVs is styled as “The BLOG_NAME wrote about POST_TITLE.”
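For contributors who want to generate entries programmatically, the style rules above could be captured in a small helper like the following Python sketch. The source category names (`this_blog`, `announcement`, `aws_blog`, `partner`, `individual`) and the function itself are hypothetical and are not part of the GitHub project.

```python
def format_entry(source: str, title: str, author: str = "") -> str:
    """Format one Week in Review entry according to the style rules.

    `source` is a hypothetical category label; `author` is the blog
    name or the person's name, depending on the category.
    """
    if source == "this_blog":
        return f"I wrote about {title}."
    if source == "announcement":
        return f"We announced that {title}."
    if source in ("aws_blog", "partner"):
        # Other AWS blogs and partner/ISV blogs share the same pattern.
        return f"The {author} wrote about {title}."
    if source == "individual":
        return f"{author} wrote about {title}."
    raise ValueError(f"unknown source category: {source}")
```

For example, `format_entry("aws_blog", "Deep Dive on Amazon S3", "AWS Storage Blog")` would produce a line in the "The BLOG_NAME wrote about POST_TITLE" style (the blog name here is only a placeholder).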

There's room for some innovation and variation to keep things interesting, but keep it clean and concise. Please feel free to review some of my older posts to get a sense for what works.

Over time we might want to create a more compelling visual design for the posts. Your ideas (and contributions) are welcome.

Sections

Over the years I created the following sections:

- Daily Summaries – content from this blog, other AWS blogs, and everywhere else.

- New & Notable Open Source.

- New SlideShare Presentations.

- New YouTube Videos including APN Success Stories.

- New AWS Marketplace products.

- New Customer Success Stories.

- Upcoming Events.

- Help Wanted.

Some of this content comes to my attention via RSS feeds. I will post the OPML file that I use in the GitHub repo and you can use it as a starting point. The New & Notable Open Source section is derived from a GitHub search for aws. I scroll through the results and pick the 10 or 15 items that catch my eye. I also watch /r/aws and Hacker News for interesting and relevant links and discussions.

Over time, it is possible that groups or individuals may become the primary contributors for a section. That's fine, and I would be thrilled to see this happen. I am also open to the addition of new sections, as long as they are highly relevant to AWS.

Automation

Earlier this year I tried to automate the process, but I did not like the results. You are welcome to give this a shot on your own. I do want to make sure that we continue to exercise human judgement in order to keep the posts as valuable as possible.

Let's Do It

I am super excited about this project and I cannot wait to see those pull requests coming in. Please let me know (via a blog comment) if you have any suggestions or concerns.

I should note up front that I am very new to Git-based collaboration and that this is going to be a learning exercise for me. Do not hesitate to let me know if there's a better way to do things!

-Jeff;

Monday, August 22, 2016

Amazon's Plan for an Echo-Only Music Subscription Service

Sunday, August 21, 2016

Rent Virtual Desktops through Amazon Workspaces

Thursday, August 18, 2016

Amazon WorkSpaces Update – Hourly Usage and Expanded Root Volume

In my recent post, I Love My Amazon WorkSpace, I shared the story of how I became a full-time user and big fan of Amazon WorkSpaces. Since writing the post I have heard similar sentiments from several other AWS customers.

Today I would like to tell you about some new and recent developments that will make WorkSpaces more economical, more flexible, and more useful:

- Hourly WorkSpaces – You can now pay for your WorkSpace by the hour.

- Expanded Root Volume – Newly launched WorkSpaces now have an 80 GB root volume.

Let's take a closer look at these new features.

Hourly WorkSpaces

If you only need part-time access to your WorkSpace, you (or your organization, to be more precise) will benefit from this feature. In addition to the existing monthly billing, you can now use and pay for a WorkSpace on an hourly basis, allowing you to save money on your AWS bill. If you are a part-time employee, a road warrior, share your job with another part-timer, or work on multiple short-term projects, this feature is for you. It is also a great fit for corporate training, education, and remote administration.

There are now two running modes – AlwaysOn and AutoStop:

- AlwaysOn – This is the existing mode. You have instant access to a WorkSpace that is always running, billed by the month.

- AutoStop – This is new. Your WorkSpace starts running and billing when you log in, and stops automatically when you remain disconnected for a specified period of time.

A WorkSpace that is running in AutoStop mode will automatically stop a predetermined amount of time after you disconnect (1 to 48 hours). Your WorkSpaces Administrator can also force a running WorkSpace to stop. When you next connect, the WorkSpace will resume, with all open documents and running programs intact. Resuming a stopped WorkSpace generally takes less than 90 seconds.

Your WorkSpaces Administrator can choose your running mode when launching your WorkSpace.

The Administrator can change the AutoStop time and the running mode at any point during the month. They can also track the number of working hours that your WorkSpace accumulates during the month using the new UserConnected CloudWatch metric, and switch from AutoStop to AlwaysOn when this becomes more economical. Switching from hourly to monthly billing takes place upon request; however, switching the other way takes place at the start of the following month.
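The decision about when AlwaysOn becomes more economical can be sketched as simple arithmetic. The Python example below assumes that AutoStop billing consists of an optional fixed monthly fee plus a per-hour rate. That billing structure and all of the prices used with it are placeholder assumptions, so check the WorkSpaces Pricing page for real figures.

```python
def break_even_hours(monthly_price: float, hourly_rate: float,
                     hourly_base_fee: float = 0.0) -> float:
    """Hours of use per month at which AutoStop and AlwaysOn cost the same."""
    return (monthly_price - hourly_base_fee) / hourly_rate


def cheaper_running_mode(hours_used: float, monthly_price: float,
                         hourly_rate: float, hourly_base_fee: float = 0.0) -> str:
    """Return the running mode with the lower cost for the given usage."""
    auto_stop_cost = hourly_base_fee + hours_used * hourly_rate
    return "AutoStop" if auto_stop_cost < monthly_price else "AlwaysOn"
```

With hypothetical prices of 35.00 per month for AlwaysOn, and 10.00 per month plus 0.25 per hour for AutoStop, the break-even point is 100 hours. An Administrator could feed the hours observed through the UserConnected metric into `cheaper_running_mode` to decide whether to switch.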

All new Amazon WorkSpaces can take advantage of hourly billing today. If you're using a custom image for your WorkSpaces, you'll need to refresh your custom images from the latest Amazon WorkSpaces bundles. The ability for existing WorkSpaces to switch to hourly billing will be added in the future.

To learn more about pricing for hourly WorkSpaces, visit the WorkSpaces Pricing page.

Expanded Root Volume

By popular demand we have expanded the size of the root volume for newly launched WorkSpaces to 80 GB, allowing you to run more applications and store more data at no additional cost. Your WorkSpaces Administrator can rebuild existing WorkSpaces in order to upgrade them to the larger root volumes (read Rebuild a WorkSpace to learn more). Rebuilding a WorkSpace will restore the root volume (C:) to the most recent image of the bundle that was used to create the WorkSpace. It will also restore the data volume (D:) from the last automatic snapshot.

Some WorkSpaces Resources

While I have your attention, I would like to let you know about a couple of other important WorkSpaces resources:

- Getting Started – Our WorkSpaces Getting Started page includes a new step-by-step WorkSpaces Implementation Guide and other handy documentation.

- Recorded Webinar – Late last month my colleague Salman Paracha delivered the Intro to Amazon WorkSpaces webinar. Watch it to learn how WorkSpaces can be used to support a diverse and dynamic global workforce and improve your organization's security position while providing users with a familiar and productive desktop experience.

- Whitepapers – Our new Best Practices for Deploying Amazon WorkSpaces whitepaper addresses network considerations, directory services for user authentication, security, monitoring, and logging. The Desktop-as-a-Service whitepaper reviews the greater operational control, reduced costs, and security benefits of WorkSpaces.

- Case Studies – The Louisiana Department of Corrections and Endemol Shine Group case studies will show you how AWS customers are putting WorkSpaces to use.

Available Now

The features that I described above are available now and you can start using them today!

Jeff;

Top 10 Toys Your Kids Will Want this Christmas

Target Sells Amazon Gadgets Again After a Four-Year Hiatus

Tuesday, August 16, 2016

Discounted Deals on Kindle, Fire and Echo

Monday, August 15, 2016

The Coolest Tech Tools for Back to School

Sunday, August 14, 2016

The Amazing Instant Pot

Friday, August 12, 2016

The Latest News on the Apple Watch 2

Thursday, August 11, 2016

AWS Webinars – August, 2016

Everyone on the AWS team understands the value of educating our customers on the best ways to use our services. We work hard to create documentation, training materials, and blog posts for you! We run live events such as our Global AWS Summits and AWS re:Invent where the focus is on education. Last but not least, we put our heads together and create a fresh lineup of webinars for you each and every month.

We have a great selection of webinars on the schedule for August. As always they are free, but they do fill up and I strongly suggest that you register ahead of time. All times are PT, and each webinar runs for one hour:

August 23

- 9:00 AM – Introducing Amazon EMR Release 5.0: Faster, Easier, Hadoop, Spark, and Presto.

- 10:30 AM – Best Practices for Data Center Migration Planning.

- Noon – Best Practices for Running SAP HANA Workloads with Amazon EC2 X1 Instances.

August 24

- 9:00 AM – Amazon Aurora for the Enterprise: Lower Cost, Better Performance.

- 10:30 AM – Getting Started with Serverless Architectures.

- Noon – Best Practices for Building a Data Lake with Amazon S3.

August 25

- 9:00 AM – Managing IoT and Time Series Data with Amazon ElastiCache for Redis.

- 10:30 AM – Running Microservices and Docker on AWS Elastic Beanstalk.

- Noon – Getting Started with Microsoft SQL Server 2016 on Amazon EC2.

August 30

- 9:00 AM – Introduction to Amazon Kinesis Firehose.

- 10:30 AM – Building Serverless Chat Bots.

- Noon – Continuous Delivery to Amazon ECS.

August 31

- 9:00 AM – Stream Data Analytics with Amazon Kinesis Firehose & Redshift.

- 10:30 AM – Getting Started with AWS Device Farm.

- Noon – AWS IoT Button.

Jeff;

PS – Check out the AWS Webinar Archive for more great content!

AWS Solution – Transit VPC

Today I would like to tell you about a new AWS Solution. This one is cool because of what it does and how it works! Like the AWS Quick Starts, this one was built by AWS Solutions Architects and incorporates best practices for security and high availability.

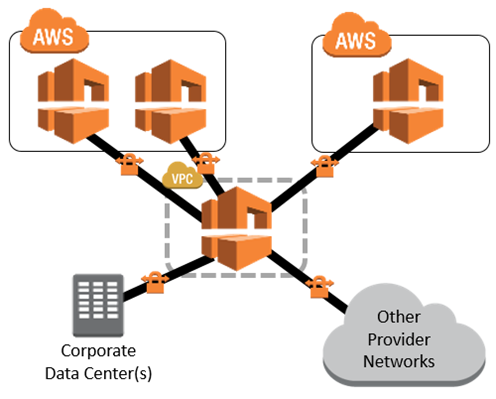

The new Transit VPC Solution shows you how to implement a very useful networking construct that we call a transit VPC. You can use this to connect multiple Virtual Private Clouds (VPCs) that might be geographically disparate and/or running in separate AWS accounts, to a common VPC that serves as a global network transit center. This network topology simplifies network management and minimizes the number of connections that you need to set up and manage. Even better, it is implemented virtually and does not require any physical network gear or a physical presence in a colocation transit hub. Here's what this looks like:

In this diagram, the transit VPC is central, surrounded by additional “spoke” VPCs, corporate data centers, and other networks.

The transit VPC supports several important use cases:

- Private Networking – You can build a private network that spans two or more AWS Regions.

- Shared Connectivity – Multiple VPCs can share connections to data centers, partner networks, and other clouds.

- Cross-Account AWS Usage – The VPCs and the AWS resources within them can reside in multiple AWS accounts.

The solution uses an AWS CloudFormation stack to launch and configure all of the AWS resources. It provides you with three throughput options ranging from 500 Mbps to 2 Gbps, each implemented over a pair of connections for high availability. The stack makes use of the Cisco Cloud Services Router (CSR), which is now available in AWS Marketplace. You can use your existing CSR licenses (the BYOL model) or you can pay for your CSR usage on an hourly basis. The cost to run a transit VPC is based on the throughput option and licensing model that you choose, and ranges from $0.21 to $8.40 per hour, with an additional cost (for AWS resources) of $0.10 per hour for each spoke VPC. There's an additional cost of $1 per month for an AWS Key Management Service (KMS) customer master key that is specific to the solution. All of these prices are exclusive of network transit costs.
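To get a feel for how these prices add up, here is a small Python sketch that estimates a monthly bill from the figures quoted above. The 730-hour month is an assumption made for the estimate, and, as in the text, network transit costs are not included.

```python
SPOKE_HOURLY = 0.10  # per spoke VPC, per hour (from the text above)
KMS_MONTHLY = 1.00   # customer master key, per month (from the text above)


def transit_vpc_monthly_cost(base_hourly: float, spoke_vpcs: int,
                             hours: float = 730) -> float:
    """Estimate the monthly cost of a transit VPC, excluding network transit.

    `base_hourly` is the hourly rate for the chosen throughput option and
    licensing model; the text quotes a range of $0.21 to $8.40 per hour.
    `hours` defaults to 730, an assumed average month length.
    """
    if not 0.21 <= base_hourly <= 8.40:
        raise ValueError("base_hourly is outside the quoted $0.21-$8.40 range")
    return (base_hourly + SPOKE_HOURLY * spoke_vpcs) * hours + KMS_MONTHLY
```

For example, the lowest-cost option with three spoke VPCs works out to (0.21 + 3 × 0.10) × 730 + 1.00, or about $373.30 per month under these assumptions.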

The template installs and uses a pair of AWS Lambda functions in a creative way!

The VGW Poller function runs every minute. It scans all of the AWS Regions in the account, looking for appropriately tagged Virtual Private Gateways in spoke VPCs that do not have a VPN connection. When it finds one, it creates (if necessary) the corresponding customer gateway and the VPN connections to the CSR, and then saves the information in an S3 bucket.

The Cisco Configurator function is triggered by the Put event on the bucket. It parses the VPN connection information and generates the necessary config files, then pushes them to the CSR instances using SSH. This allows the VPN tunnels to come up and, via the magic of BGP, neighbor relationships are established with the spoke VPCs.

By using Lambda in this way, new spoke VPCs can be brought online quickly without the overhead of keeping an underutilized EC2 instance up and running.
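The selection step performed by the VGW Poller can be sketched in a few lines of Python. This is not the solution's actual code: the tag key and value are hypothetical, and the function works on lists shaped like the `VpnGateways` and `VpnConnections` entries returned by the EC2 `describe_vpn_gateways` and `describe_vpn_connections` API calls.

```python
SPOKE_TAG_KEY = "transitvpc:spoke"  # hypothetical tag key for this sketch
SPOKE_TAG_VALUE = "true"            # hypothetical tag value for this sketch


def gateways_needing_vpn(vpn_gateways: list, vpn_connections: list,
                         tag_key: str = SPOKE_TAG_KEY,
                         tag_value: str = SPOKE_TAG_VALUE) -> list:
    """Return IDs of tagged virtual private gateways with no VPN connection."""
    # Gateways that already have a live (not deleted) VPN connection.
    connected = {
        conn.get("VpnGatewayId")
        for conn in vpn_connections
        if conn.get("State") not in ("deleting", "deleted")
    }

    pending = []
    for gateway in vpn_gateways:
        tags = {tag["Key"]: tag["Value"] for tag in gateway.get("Tags", [])}
        if tags.get(tag_key) != tag_value:
            continue  # not marked as a spoke
        if gateway["VpnGatewayId"] in connected:
            continue  # already connected to the transit VPC
        pending.append(gateway["VpnGatewayId"])
    return pending
```

A poller built along these lines would call this once per Region with the results of the two describe calls, then create the customer gateway and VPN connections for each returned ID and write the details to the S3 bucket.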

The solution's implementation guide, as always, contains step-by-step directions and security recommendations.

-Jeff;

PS – Check out additional network best practice guidance to find answers to common network questions!